> NIL: When Doing Nothing Is the Most Rational Choice

Walter Rodriguez, PhD, PE

Abstract

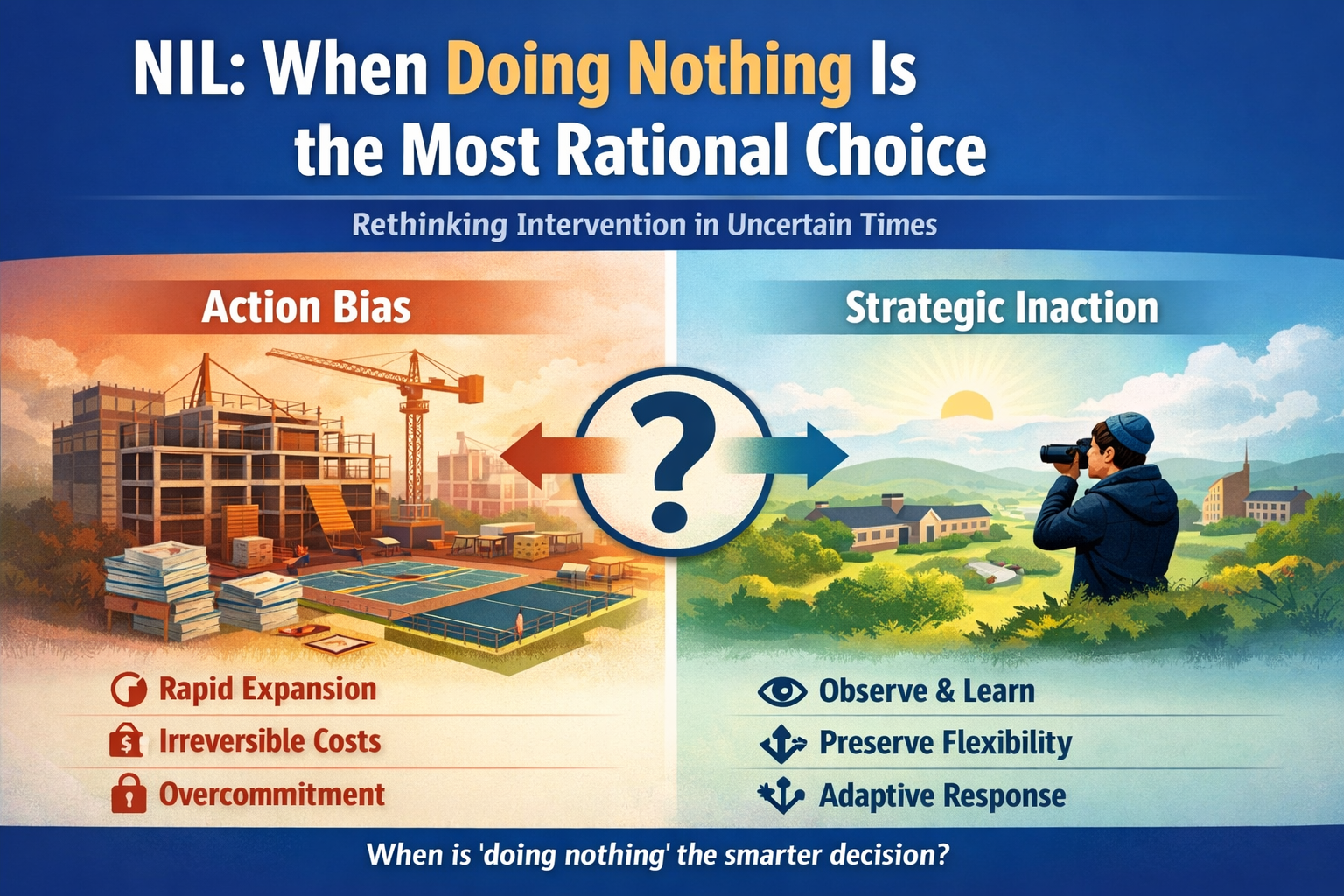

In complex systems, the absence of immediate intervention is not a void to be filled, but a signal to observe, learn, and preserve optionality. This study contends that under conditions of high uncertainty and incomplete information, strategic inaction can outperform proactive intervention. Using Name, Image, and Likeness (NIL) policy responses as a focal case, and drawing parallels to program proliferation and capital expansion in higher education and community governance, the paper demonstrates how action bias and overbuilding often produce irreversible commitments that undermine long-term institutional effectiveness. Rather than advocating passivity, the study reframes doing nothing as a deliberate governance strategy that prioritizes learning, adaptability, and long-term value creation.

Keywords: NIL, action bias, strategic inaction, higher education governance, institutional decision-making

Introduction

In contemporary policy and organizational discourse, the acronym NIL is most commonly associated with Name, Image, and Likeness rights in collegiate athletics. Yet, the term NIL also carries a literal meaning: zero. This duality offers a useful conceptual lens for examining a broader institutional tendency toward premature action in the face of uncertainty. Across higher education, community governance, and athletic administration, leaders frequently equate decisiveness with progress, favoring expansion, regulation, or construction even in the absence of demonstrated need. This tendency reflects a well-documented phenomenon known as action bias: the systematic preference for doing something rather than waiting, even when restraint may yield superior outcomes.

The debate surrounding NIL policies provides a timely and instructive case. Following judicial decisions that weakened long-standing NCAA restrictions, universities, conferences, states, and policymakers moved rapidly to construct regulatory frameworks, compliance offices, and contractual mechanisms intended to govern NIL activities. These interventions emerged amid profound uncertainty regarding athlete behavior, market dynamics, institutional capacity, and long-term consequences. The speed and scope of NIL responses raise a fundamental question: Is immediate action always the most rational response to institutional disruption?

This question extends well beyond athletics. Similar patterns appear when universities launch new academic programs without sustained enrollment demand, or when communities expand facilities without rigorous utilization analysis. In each case, action is rewarded symbolically, while inaction is perceived as risk, indecision, or missed opportunity. Once undertaken, however, these initiatives generate irreversible financial, administrative, and political commitments that constrain future choices.

This paper argues that under conditions of high uncertainty and incomplete information, strategic inaction—a deliberate choice to defer irreversible commitments—can outperform proactive intervention. Using NIL policy responses as a focal case and drawing parallels to program proliferation and capital expansion in higher education and community governance, the study demonstrates how action bias and overbuilding often undermine long-term institutional effectiveness. By situating NIL within a broader theoretical and institutional context, the paper challenges the assumption that responsible leadership requires immediate action and offers an alternative framework for evaluating when restraint may be the most rational choice.

Literature Review

Action Bias and Decision-Making Under Uncertainty

Action bias originates in behavioral economics and decision science and describes a systematic inclination toward action even when evidence favors restraint. In uncertain environments, decision-makers often perceive inaction as riskier than action because the consequences of doing something are visible, while the benefits of waiting are counterfactual and difficult to measure. This asymmetry creates incentives to intervene prematurely.

Research in behavioral economics demonstrates that cognitive heuristics, institutional incentives, and reputational pressures reinforce action bias, particularly in high-stakes or highly visible contexts. Leaders are rewarded for initiative and penalized for delay, regardless of outcome quality. As a result, organizations tend to favor solutions that signal responsiveness rather than those that optimize long-term value.

Institutional Overbuilding and Program Proliferation

Higher education scholarship documents persistent patterns of program proliferation disconnected from labor market demand or student enrollment trends. Universities often launch new degrees or certificates to signal innovation or competitiveness, despite limited evidence of sustainability. Once established, programs rarely sunset due to faculty investment, accreditation complexity, and reputational concerns.

Facilities expansion follows similar dynamics. Capital projects are frequently justified through aspirational growth narratives rather than demonstrated utilization. Maintenance costs, staffing requirements, and opportunity costs are routinely underestimated, resulting in long-term budgetary strain. These dynamics illustrate how visible expansion often substitutes for evidence-based planning.

Complex Adaptive Systems and Governance

Universities and athletic ecosystems function as complex adaptive systems characterized by nonlinearity, feedback loops, and emergent behavior. In such systems, early interventions can distort natural adaptation processes and lock organizations into suboptimal trajectories. The knowledge required to govern effectively is dispersed, evolving, and often unknowable in advance.

From this perspective, strategic patience allows systems to reveal demand patterns, behavioral responses, and unintended consequences before governance structures solidify. Preserving optionality under uncertainty increases resilience and reduces exposure to irreversible downside risk.

NIL Policy as a Case of Premature Intervention

Legal scholarship emphasizes that NIL reform emerged rapidly following antitrust challenges to NCAA authority. In response, institutions constructed compliance mechanisms and contractual norms before markets stabilized. Several scholars warn that fragmented state legislation and institutional rulemaking may exacerbate inequities and constrain athlete opportunity.

Economic analyses suggest that NIL markets remain in an early discovery phase, characterized by experimentation, price volatility, and uneven participation. Premature standardization risks freezing inefficient practices and entrenching power asymmetries.

Reframing Inaction as Strategic Choice

Emerging governance literature reframes restraint as an active decision rather than abdication. Strategic inaction emphasizes monitoring, data collection, and reversibility. In this view, doing nothing temporarily preserves flexibility and enables more informed intervention later. This perspective challenges dominant narratives in higher education and athletics, where legitimacy is often tied to visible expansion.

Methods and Conceptual Framework

Research Design

This study employs a conceptual and qualitative analytic design appropriate for examining governance behavior under conditions of uncertainty. Rather than testing a narrowly defined hypothesis, the paper develops and applies an integrative conceptual framework to evaluate institutional decision-making patterns across multiple domains. This approach aligns with theory-building traditions in higher education policy, organizational theory, and sports governance.

The analysis proceeds in three stages. First, literature from behavioral economics, institutional theory, and complex adaptive systems is synthesized to distinguish between action bias and strategic inaction. Second, this framework is applied to NIL policy responses in collegiate athletics. Third, structured comparisons are drawn between NIL governance and analogous forms of institutional overbuilding in higher education and community governance.

Conceptual Framework: Action Bias Versus Strategic Inaction

Action bias is defined as the systematic preference for immediate intervention—such as regulation, expansion, or construction—despite limited empirical evidence of necessity or effectiveness. In institutional contexts, action bias is reinforced by political accountability, reputational signaling, and incentive structures that reward visible activity over long-term outcomes. Once initiated, such decisions generate sunk costs and organizational rigidity.

Strategic inaction, by contrast, is a deliberate governance choice to defer irreversible commitments while monitoring system behavior, collecting data, and preserving optionality. Strategic inaction does not imply passivity; it emphasizes learning, adaptability, and reversibility. This orientation is particularly suited to complex adaptive systems, where premature intervention can distort emergent equilibria.
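A stylized real-options calculation makes the case for preserving optionality concrete. The sketch below is illustrative only. The `Scenario` fields, the two-state demand model, the one-period wait, and every number in the usage example are hypothetical assumptions, not estimates drawn from NIL data. It compares two choices: committing to an irreversible structure now, or observing for one period and committing only if demand proves high.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical two-state decision; all values are illustrative."""
    cost: float         # irreversible up-front commitment (e.g., a new office)
    payoff_high: float  # value of the commitment if demand turns out high
    payoff_low: float   # value of the commitment if demand turns out low
    p_high: float       # probability that demand turns out high
    discount: float     # per-period discount factor, i.e., the cost of delay


def value_act_now(s: Scenario) -> float:
    """Commit before uncertainty resolves: the cost is paid in every state."""
    expected_payoff = s.p_high * s.payoff_high + (1 - s.p_high) * s.payoff_low
    return expected_payoff - s.cost


def value_wait(s: Scenario) -> float:
    """Observe for one period, then commit only in states where it pays."""
    gain_high = max(s.payoff_high - s.cost, 0.0)
    gain_low = max(s.payoff_low - s.cost, 0.0)
    return s.discount * (s.p_high * gain_high + (1 - s.p_high) * gain_low)


def option_value_of_waiting(s: Scenario) -> float:
    """Positive when deferring the commitment beats acting immediately."""
    return value_wait(s) - value_act_now(s)


if __name__ == "__main__":
    patient = Scenario(cost=100, payoff_high=180, payoff_low=40,
                       p_high=0.5, discount=0.9)
    print(value_act_now(patient), value_wait(patient),
          option_value_of_waiting(patient))
```

With these invented numbers, acting now is worth 10 and waiting is worth 36, so the option value of waiting is 26. The discount factor carries the key design choice. Lowering it to 0.2 makes waiting worth only 8, less than acting now. This is consistent with the view that the goal is disciplined timing rather than permanent restraint.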

NIL Case Analysis: When Action Outpaces Understanding

NIL as a Disruptive Policy Shock

The emergence of NIL rights represents a structural shock to collegiate athletics governance. Judicial decisions eroded centralized NCAA authority, shifting power to a fragmented ecosystem of institutions, conferences, states, and private actors. This disruption created a policy environment characterized by legal ambiguity, economic uncertainty, and pressure for rapid response.

Institutional Responses and Action Bias

Universities responded by establishing compliance offices, issuing detailed policy manuals, and partnering with third-party NIL platforms despite limited empirical understanding of athlete participation, market demand, or enforcement feasibility. These actions created visible signals of control but embedded fixed administrative costs and procedural rigidity.

State legislatures reinforced this dynamic through widely varying NIL statutes, further incentivizing institutional overreaction. Rather than allowing NIL markets to evolve organically, institutions attempted to preemptively standardize behavior in the absence of clear evidence of harm or market failure.

NIL as Market Discovery

NIL markets remain in an early discovery phase. Participation is uneven across sports and institutions, with a small subset of high-profile athletes capturing disproportionate value. For most student-athletes, NIL engagement is modest or nonexistent. From a strategic inaction perspective, this uneven uptake is not a failure but an informative signal.

Premature regulation risks freezing assumptions about compensation, fairness, and competitive balance before market dynamics stabilize. By intervening early, institutions may suppress innovation, reinforce inequalities, or discourage experimentation.

Competitive Balance and the Illusion of Urgency

Concerns over competitive balance frequently justify rapid NIL intervention. However, disparities in collegiate athletics predate NIL by decades, driven by media contracts, donor bases, and conference affiliations. NIL did not create inequality; it revealed it. Action bias treats this newly visible inequality as a crisis, prompting regulation aimed at controlling outcomes rather than at understanding behavior.

Parallels to Institutional Overbuilding

The NIL response mirrors patterns observed in academic program proliferation and capital expansion. In each case, leaders act under uncertainty, justify intervention through aspirational narratives, and discount long-term maintenance costs. Once built—whether programs, facilities, or compliance regimes—these structures persist even when utilization falls short.

Reinterpreting “Doing Nothing”

In the NIL context, doing nothing does not mean abandoning athletes or ignoring legal realities. It means resisting the impulse to overengineer solutions before problems are fully understood. Strategic inaction prioritizes transparency over restriction, monitoring over enforcement, and adaptability over premature standardization.

Discussion and Implications

This analysis suggests that institutional responses to NIL reflect a broader governance pathology: action bias under uncertainty. When leaders equate activity with responsibility, they risk locking institutions into costly and inflexible commitments that outpace demonstrated need. Strategic inaction offers an alternative approach grounded in learning, evidence accumulation, and optionality preservation.

For policymakers and institutional leaders, the implication is not permanent restraint, but disciplined timing. Interventions should be triggered by evidence of systemic harm rather than by discomfort with uncertainty. Transparency, data collection, and sunset provisions may offer more effective governance tools than premature standardization.

Conclusion

In complex systems, doing nothing can be the most rational choice. The NIL case illustrates how action bias and overbuilding emerge when uncertainty is mistaken for failure rather than information. By reframing inaction as a deliberate governance strategy, institutions can preserve flexibility, reduce downside risk, and make more informed decisions over time. The challenge for leadership is not knowing when to act, but knowing when not to.

References


Baker, T. A., & Wong, G. M. (2020). Athlete compensation and NIL reform. Journal of Sport Management, 34(6), 475–488.

Edelman, M. (2021). The future of amateurism after Alston. Duke Law Journal, 71(1), 1–45.

Goldstein, H. A., & Drucker, J. (2006). The economic development impacts of universities. Research in Higher Education, 47(1), 21–57.

Hayek, F. A. (1945). The use of knowledge in society. American Economic Review, 35(4), 519–530.

Holland, J. H. (1992). Complex adaptive systems. Daedalus, 121(1), 17–30.

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

McCann, M. (2021). NCAA, NIL, and antitrust. Harvard Journal of Sports & Entertainment Law, 12, 1–38.

Moynihan, D. P. (2019). Policy feedback and learning. Public Administration Review, 79(2), 238–247.

Patt, A., & Zeckhauser, R. (2000). Action bias and environmental decisions. Journal of Risk and Uncertainty, 21(1), 45–72.

Sanderson, A. R., & Siegfried, J. J. (2023). The economics of NIL. Journal of Sports Economics, 24(2), 123–142.

Taleb, N. N. (2012). Antifragile. Random House.

Zemsky, R. (2020). Making sense of the college curriculum. Rutgers University Press.

> The Future Is Being Funded by the People Who Will Use It Most

Older Adults as the Unexpected Financiers and Beneficiaries of the AI and Robotics Revolution

By Coursewell Staff

Abstract

Artificial intelligence (AI) and robotics are accelerating at unprecedented speed, transforming healthcare, industry, and daily life. While popular narratives focus on the impacts of AI on younger workers, the demographic group most quietly financing—and most directly benefiting from—this technological wave is older adults. Through concentrated wealth, investment portfolios, pension systems, purchasing power, and healthcare needs, older adults constitute the backbone of AI’s financial infrastructure. Parallel to this financial influence, older adults are emerging as the primary beneficiaries of AI-enabled healthcare, assistive robotics, predictive analytics, and aging-in-place technologies. This article draws upon demographic, economic, and technological research to argue that the future is being funded by the people who will use it most—a demographic inversion with major implications for public policy, healthcare delivery, and AI development priorities.

Introduction

The global AI and robotics revolution is often depicted as youth-driven or disruptive primarily to working-age populations. Yet deeper analysis reveals a demographic paradox: older adults not only fund much of the technology ecosystem but also stand to gain the most from its innovations. Their retirement savings, pension assets, and index-fund holdings heavily support AI-driven companies, while their healthcare needs and aging-related challenges shape demand for AI and robotic solutions. As societies age and longevity rises, AI and robotics are increasingly being built for—and financed by—the world’s older adults.

Wealth Concentration Among Older Adults

Older adults hold the largest share of private wealth in the United States and most developed economies. Individuals aged 55 and older hold approximately 65–70% of total U.S. household wealth (Federal Reserve Board, 2024). This wealth is held largely in:

retirement accounts (401(k)s, IRAs)

pension systems

real estate

index funds and ETFs

dividend-oriented portfolios

Major AI companies, including Microsoft, Nvidia, Amazon, Alphabet, and Apple, carry heavy weights in market-capitalization-weighted indices. As a result, older adults’ portfolios provide a continuous flow of capital to the firms driving the AI revolution. Even passive strategies such as target-date retirement funds allocate a substantial share of their equity holdings to these companies.

Pension Funds as AI Financiers

Globally, pension funds manage more than $56 trillion in assets (OECD, 2023). These funds seek long-term stability and growth, which makes AI and technology sectors attractive holdings. Pension portfolios commonly include substantial exposure to:

cloud infrastructure

semiconductors

automation technologies

robotic process automation (RPA) firms

healthcare technology

venture and private equity with AI exposure

As such, older adults indirectly support AI R&D whenever their pension systems allocate or rebalance capital toward these sectors in pursuit of stable long-term returns. Retirees’ financial security is therefore tied to the performance of AI-intensive companies. Those companies, in turn, draw on older adults’ long-term capital to fuel innovation.

Older Adults as Primary Consumers of AI Technologies

Although older adults are seldom recognized as drivers of technology adoption, they are heavy users of AI-enabled services because of their healthcare utilization, mobility needs, and desire for independence. AI has become central to:

medical diagnostics

telehealth and virtual care

chronic disease management

medication adherence platforms

cognitive health tools

insurance and risk analytics

smart home monitoring systems

These technologies improve accuracy, reduce hospitalizations, prevent medical errors, and extend independence. The result is a reciprocal system in which older adults both fund AI advancement and rely on its benefits.

Automation and Robotics Transforming the Experience of Aging

AI-Enhanced Mobility and Fall Prevention

Falls remain one of the leading causes of injury-related hospitalization among older adults. AI-equipped devices, such as robotic exoskeletons, LiDAR-based smart walkers, and fall-prediction wearables, have been associated with a 45% reduction in fall risk (National Institute on Aging, 2024).

These mobility technologies extend safe ambulation and reduce dependence on caregivers.

Companion Robots for Social and Cognitive Health

Social isolation and cognitive decline are major risks for aging populations. Companion robots such as PARO, ElliQ, and LOVOT use varying combinations of natural-language processing, emotion recognition, and adaptive learning to provide:

emotional support

cognitive stimulation

medication reminders

social interaction prompts

Studies suggest that such robots can reduce depressive symptoms and improve overall well-being (WHO, 2024). Countries with rapidly aging populations, including Japan and Sweden, have already deployed thousands of these systems.

Domestic Robotic Assistants

Robots designed for home assistance are becoming crucial infrastructure for independent living. These systems include:

autonomous cleaning robots

robotic kitchen assistants

AI-powered medication dispensers

sensor-driven bathroom safety devices

robotic lift systems for mobility assistance

Research indicates that assistive robots can reduce caregiver burden by 30% and delay the need for long-term care by 6–18 months (WHO, 2024).

Healthcare Robotics and Intelligent Diagnostics

Hospitals and clinics are rapidly adopting robotic systems for precision tasks such as:

blood draws

supply delivery

surgical assistance

ultrasound scanning

pharmacy automation

AI-assisted diagnostic platforms have demonstrated improved accuracy in detecting cancers, cardiac anomalies, and early signs of neurodegenerative conditions (Mayo Clinic, 2024). Because older adults account for the largest share of healthcare use, they benefit most directly from these gains in efficiency and accuracy.

Aging in Place Through AI and Home Automation

AARP (2024) reports that 77% of older adults prefer to age in place. AI and robotics increasingly make this feasible through:

fall detection and emergency response systems

smart lighting pathways

stove and appliance automation

AI-driven home safety monitoring

predictive maintenance alerts

voice-controlled robotic assistants

These systems reduce accidents, maintain dignity, and extend independent living—all critical factors for the aging population.

The AI–Aging Convergence

Many of the fastest-growing AI applications align closely with aging-related needs. This is not coincidental; demographic shifts are reshaping the priorities of healthcare, industry, and policy. From autonomous transportation to robotic caregiving and predictive medicine, the next decade of AI innovation will revolve heavily around older adults.

Thus, the core thesis holds:

Older adults fund the AI revolution financially and benefit from it technologically.

Discussion

Understanding older adults as both financiers and beneficiaries of AI reframes the technology narrative. This demographic is not being left behind; it is propelling—and shaping—the direction of AI and robotics. Policymakers, healthcare leaders, and technologists must recognize the central role older adults play in financing AI ecosystems and prioritize inclusive, ethical, and accessible technologies that address aging-related challenges.

Conclusion

Older adults are far more than passive observers in the AI and robotics revolution. They are its economic engine and its most immediate beneficiaries. Through retirement savings, investments, pension funds, and healthcare spending, older adults fuel the technological ecosystem that will sustain their longevity, autonomy, and quality of life. In every sense, the future is being funded by the people who will use it most.

References

AARP. (2024). Aging in Place: Technology Trends in Senior Living.

Federal Reserve Board. (2024). Distribution of Household Wealth in the U.S., 1989–2023.

Mayo Clinic. (2024). AI-Assisted Diagnostics in Clinical Practice.

National Institute on Aging. (2024). Mobility Technologies and Fall Prevention in Older Adults.

Organisation for Economic Co-operation and Development. (2023). Pension Markets in Focus.

U.S. Census Bureau. (2024). Demographic Turning Points for the United States: Population Projections 2020–2060.

World Health Organization. (2024). Robotics and Assistive Technologies for Aging Populations.

> Where Students Quietly Learn to Get Super Rich

How the Next Generation Masters AI, Finance, and Entrepreneurship Before Their First Job Offer

By Coursewell Staff

For decades, conversations about “getting rich” centered on landing prestigious corporate jobs. Today, a different kind of wealth education is emerging—one that trains students how to grow capital, build companies with a purpose, and leverage AI long before they negotiate their first salary.

This article explores the new “wealth-creation learning framework,” analyzes the economic logic behind it, and examines a powerful recent example: the “Mastering Wealth” course taught by entrepreneur Pete Kadens at Chicago State University (CSU), where students—many from low-income backgrounds—are learning how to build multimillion-dollar futures.

Most importantly, we’ll look at how wealth-creation training can democratize the same skills for learners everywhere, including my own students and grandchildren.

1. Why Wealth Creation Looks Different Today

Economists distinguish between:

Labor income (what you earn)

Capital income (what your assets earn)

Students who go on to build extraordinary wealth often learn early that:

Wealth comes from ownership, not employment.

This means mastering:

Equity

Entrepreneurship

Financial literacy

Capital formation

Building things

AI-driven productivity multipliers

And the most forward-thinking educational ecosystems—universities, startup hubs, AI training programs—are shifting from job-preparation to wealth-preparation.

2. Business Schools as Wealth Accelerators

Elite business programs now function as “wealth factories” that develop capability, not just employability.

A. Financial Rigor

Valuation, risk modeling, optimization, statistics, and market strategy.

B. Entrepreneurship Pipelines

Incubators, accelerators, venture labs, student funds, pitch programs.

C. Social Capital

Networks that keep producing opportunities throughout a career.

Students come out able to think like:

founders

investors

strategists

owners

Not just employees.

3. Trading & Quant Labs: Where Analytical Thinking Becomes Income

Modern trading environments teach:

probability-based decision-making

algorithmic thinking

data-driven judgment

risk discipline

execution under pressure

Students exposed to this training can move rapidly into the top percentiles of the income distribution, especially when their analytical skills are combined with early investing.

But perhaps the most fascinating real-world example isn’t happening in elite schools at all…

4. The CSU “Mastering Wealth” Experiment — A Case Study in Democratizing Affluence

In November 2025, The Wall Street Journal published an extraordinary story by Jeanne Whalen titled “The Students Learning How to Get Very, Very Rich.”

The article highlighted a groundbreaking class at Chicago State University taught by entrepreneur Pete Kadens, who built wealth in various ventures and is now worth roughly $250 million.

His class rejects conventional job-seeking advice in favor of teaching students how to build real net worth, fueled by ambition, ownership, and entrepreneurial execution.

What makes the class remarkable?

A. Students Set Concrete, Bold Net-Worth Targets

These are not mild goals. Examples:

$3 million in 10 years (expanding a cleaning business + a tax-prep venture of their own)

$10 million by 2035 (aromatherapy products + counseling practice + real estate + publishing)

$25 million by 2036 (founding a company + investment strategy)

$27 million by age 45 (vertically integrated design + build + real estate)

Students map out ownership pathways, not “find a good job” pathways.

B. Identifying Consumer Pain Points

Students practice spotting unsolved problems and proposing solutions, such as:

automated pothole-repair machines

wearable air purifiers

self-inflating tire systems (the winning class project)

Students learn that wealth flows to those who solve meaningful problems at scale.

C. Developing Business Plans

Teams build:

market analyses

budgets (including a $4M plan for the tire product)

product development roadmaps

risk/return assumptions

It’s applied entrepreneurship—not theory.

D. Learning to Take Risks

Kadens bluntly teaches:

“If you want to be Jay-Z rich… that’s not going to work unless you take real risks.”

He even hands out $100 bills to students who can articulate how they will 10× their income.

Risk tolerance becomes a teachable skill, not a personality trait.

E. Exposure to Real Millionaires and Billionaires

Guest speakers include founders who:

built companies worth billions

entered industries during massive inflection points

leveraged technology and timing

built generational wealth from scratch

The message:

This is possible for you—if you learn the skills and take the shots.

F. Purpose Beyond Money

Wealth plans include:

mentorship goals

philanthropy

community uplift

generational legacy

One student wrote:

“This is a vision of being completely in bliss—fully engulfed in the American dream I have created for myself.”

In short, Kadens is democratizing the mindset and methods of the wealthy.

5. Lessons From the CSU Model

The CSU experiment shows that:

1. Wealth Mindset Can Be Taught

Students learned to think beyond paycheck ceilings.

2. Ownership Is Accessible

Even those working multiple jobs with no safety net can design scalable ventures.

3. Real Wealth Starts With Ambition + Roadmap

Students used Kadens’s class to build detailed pathways, not fantasies.

4. Exposure Creates Belief

Meeting founders worth hundreds of millions removes psychological ceilings.

5. Community Impact Multiplies Wealth

Kadens emphasizes the “blast radius” effect—when someone gets wealthy in an underserved community, everyone around them feels the impact.

6. How We Can Deliver Similar Wealth Training Everywhere

The CSU case isn't an outlier—it's a roadmap.

We can teach, and you can learn, the same components:

A. AI-Enhanced Wealth Literacy

portfolio simulations

compounding exercises

capital vs. labor analysis

risk modeling

business case modeling
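A compounding exercise of the kind listed above can be sketched in a few lines. This is a minimal illustration that assumes a fixed annual return and end-of-year contributions. The function name `future_value` and the sample figures ($5,000 a year at a 7% return for 30 years) are hypothetical teaching inputs, not projections or advice.

```python
def future_value(annual_contribution: float, annual_return: float, years: int,
                 starting_balance: float = 0.0) -> float:
    """Grow a balance year by year with end-of-year contributions.

    Assumes a constant annual return, which real markets do not deliver.
    """
    balance = starting_balance
    for _ in range(years):
        # Existing capital earns the return, then the new contribution is added.
        balance = balance * (1 + annual_return) + annual_contribution
    return balance


if __name__ == "__main__":
    contributed = 5_000 * 30
    ending = future_value(annual_contribution=5_000, annual_return=0.07, years=30)
    # Split the ending balance into money put in (labor) and growth (capital).
    print(f"Contributed: ${contributed:,.0f}")
    print(f"Ending balance: ${ending:,.0f}")
    print(f"Earned by capital: ${ending - contributed:,.0f}")
```

Under these assumptions, $150,000 of contributions grows to roughly $472,000. About two-thirds of the ending balance therefore comes from what the capital earned rather than from what was put in, which is the labor-versus-capital distinction in miniature.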

B. Entrepreneurship Studios

idea validation

lean startup methods

identifying pain points

building MVPs

pitch development

C. Venture Roadmap Projects

Students design real wealth-creation plans:

5-year and 10-year net worth targets

venture steps

capital needs

talent needs

revenue pathways

Just like CSU students.
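The net-worth targets in these roadmaps can be stress-tested by working backward from the goal. The sketch below assumes a constant annual return and end-of-year contributions. The function name `required_contribution` and the 8% return in the example are hypothetical inputs chosen for illustration, not figures from the CSU course.

```python
def required_contribution(target: float, annual_return: float, years: int,
                          starting_balance: float = 0.0) -> float:
    """End-of-year contribution needed to reach `target` after `years` years.

    Assumes a constant annual return; returns 0.0 if the starting balance
    alone is projected to reach the target.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    growth = (1 + annual_return) ** years
    # Future value of contributing 1 per year (the ordinary annuity factor).
    if annual_return == 0:
        annuity_factor = float(years)
    else:
        annuity_factor = (growth - 1) / annual_return
    shortfall = target - starting_balance * growth
    return max(shortfall, 0.0) / annuity_factor


if __name__ == "__main__":
    # A $3 million 10-year target, starting from zero, at an assumed 8% return.
    needed = required_contribution(target=3_000_000, annual_return=0.08, years=10)
    print(f"Required per year: ${needed:,.0f}")
```

Under these assumptions, the answer is roughly $207,000 a year, far more than most wages allow someone to save. That gap helps explain why the roadmaps rely on building and owning ventures rather than saving from a paycheck.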

D. Community Mentors & Guest Experts

Spotlight:

founders

executives

AI entrepreneurs

investors

niche creators

Each shows a different model of wealth creation.

E. Multiple-Stream Wealth Creation Model

Students learn to build:

a business

cash-flowing assets

digital revenue streams

intellectual property

AI-enabled products

F. Accessible to Everyone

Teenagers, adults, career changers, retirees—anyone with a phone and discipline.

7. Conclusion: Wealth Education Belongs to Everyone

The wealth-creation course suggests that:

Wealth is learnable.

Ambition can be taught.

Ownership is accessible.

AI is the great equalizer.

Students don’t need privilege to pursue wealth—they need structure, skills, belief, and bold goals.

That’s exactly what we aim to provide:

democratized, AI-based wealth training for everyone, everywhere.

If we want the next generation—including my own grandchildren—to thrive in the AI economy, we must teach them not just how to earn…

…but how to learn, build, own, invest, and create.

> Rethinking Expertise in the Age of AI

The Rise of the Strategic Polymath: Rethinking Expertise in the Age of Artificial Intelligence

Walter Rodriguez, PhD, PE

CEO, Adaptiva Corp / CLO, Coursewell.com

Abstract

The rapid evolution of artificial intelligence (AI) has redefined the value of expertise in the 21st century. Historically, societies have oscillated between favoring specialists—who dive deeply into narrow fields—and generalists—who span multiple domains. However, in the Age of AI, where machines can automate both routine and complex cognitive tasks, neither extreme alone ensures long-term adaptability. This paper explores the emerging archetype of the Strategic Polymath—a professional who combines broad interdisciplinary insight with selective depth and the ability to synthesize across human, organizational, and technological systems. Drawing from literature on cognitive diversity, systems thinking, and AI-augmented learning, this study proposes that strategic polymathy represents the optimal human advantage in a machine-augmented world.

Introduction: The Question of Expertise in an Intelligent Age

The question of whether it is better to be a generalist or a specialist has persisted across centuries of intellectual discourse. In the pre-industrial era, polymaths such as Leonardo da Vinci and Ibn Sina epitomized broad curiosity as a hallmark of genius (Root-Bernstein, 2003). The industrial and postwar scientific revolutions, however, privileged specialization as the path to productivity and authority (Snow, 1959). The digital revolution and, more recently, the rise of AI have reopened this debate. Artificial intelligence systems now perform tasks once considered the exclusive domain of specialists—such as medical diagnostics, data analysis, and legal research—challenging the very definition of expertise (Brynjolfsson & McAfee, 2017; Tegmark, 2018).

This paradigm shift invites a deeper question: If machines can out-specialize us, what remains distinctly human? The answer may lie in the capacity to connect, contextualize, and strategically apply knowledge across boundaries. This synthesis—the essence of polymathy—has reemerged as a critical survival and innovation skill.

From Specialist and Generalist to Hybrid Thinker

Specialists possess deep domain expertise and are indispensable for technical mastery. Yet their narrow focus can limit adaptability when paradigms shift (Taleb, 2012). Generalists, conversely, can transfer insights across contexts but often lack the depth to implement solutions effectively (Epstein, 2019).

Recent cognitive science research suggests that innovation arises not from depth or breadth alone, but from their intersection (Page, 2007). Cross-domain reasoning enables creative recombination of knowledge—a process AI can mimic but not authentically originate (Hofstadter & Sander, 2013). Thus, human value increasingly depends on strategic synthesis: identifying patterns, framing problems, and integrating technologies to achieve organizational goals.

Defining the Strategic Polymath

The Strategic Polymath is neither a mere “jack of all trades” nor a detached academic thinker. This archetype intentionally develops proficiency across several domains—technical, cognitive, and human—while maintaining strategic depth in one or two. Strategic polymaths act as translators between specialists and decision-makers, using AI tools not to replace thinking but to amplify insight (Marcus & Davis, 2020).

Their distinguishing qualities include:

Interdisciplinary curiosity – an intrinsic drive to explore connections among seemingly unrelated fields.

Systemic awareness – the ability to see how parts interact within economic, social, and technological systems.

Strategic synthesis – the use of integrated knowledge to guide human-centered, ethical action and innovation.

Adaptive learning – the continual renewal of knowledge through AI-assisted exploration and reflection.

This balance between depth, breadth, and purpose makes the strategic polymath an evolutionary adaptation to an era defined by information abundance and technological acceleration.

AI as a Catalyst for Polymathy

Artificial intelligence democratizes access to expertise, compressing learning cycles that once required decades (Huang & Rust, 2021). Tools such as large language models (LLMs) and adaptive learning systems enable individuals to rapidly traverse fields, making polymathic exploration more attainable than ever.

However, AI does not create wisdom—it expands the information landscape. Strategic polymaths transform this data deluge into insight by asking contextually intelligent questions and aligning technological outputs with human goals (Bostrom, 2014). They embody augmented cognition, where AI becomes a cognitive partner rather than a competitor.

Systems Thinking as a Core Competency

A defining feature of strategic polymathy is systems thinking, which involves recognizing that complex problems cannot be solved in isolation (Senge, 1990). AI systems themselves are embedded in broader ecosystems of ethics, economics, and culture. Strategic polymaths use systems thinking to anticipate unintended consequences, integrate feedback loops, and design solutions resilient to change.

For instance, an AI-integrated project manager might combine data analytics, behavioral science, and stakeholder communication to improve outcomes in construction or education, fields in which Dr. Walter Rodriguez and others have shown the power of cross-domain management models (Rodriguez, 2024).

Strategic Implications for Education and Leadership

Education systems, traditionally designed for disciplinary depth, must now cultivate polymathic adaptability. Interdisciplinary curricula, project-based learning, and AI-powered simulations can foster synthesis-oriented mindsets (Schmidt & Cohen, 2023). Leadership models are also evolving: the most effective executives will be those who bridge technology, psychology, and purpose—strategic integrators who think polymathically while leading systemically (Hamel & Zanini, 2020).

Conclusion: Becoming Strategically Polymathic

In the Age of AI, the future belongs neither to the narrow specialist nor to the shallow generalist, but to the Strategic Polymath—a learner-leader who unites curiosity with clarity, depth with breadth, and data with human meaning. As AI continues to redefine work and learning, the ability to connect disciplines and synthesize insight across systems will distinguish those who merely use AI from those who lead with it.

References

Bostrom, N. (2014). Superintelligence: Paths, dangers, strategies. Oxford University Press.

Brynjolfsson, E., & McAfee, A. (2017). Machine, platform, crowd: Harnessing our digital future. W. W. Norton & Company.

Epstein, D. (2019). Range: Why generalists triumph in a specialized world. Riverhead Books.

Hamel, G., & Zanini, M. (2020). Humanocracy: Creating organizations as amazing as the people inside them. Harvard Business Review Press.

Hofstadter, D., & Sander, E. (2013). Surfaces and essences: Analogy as the fuel and fire of thinking. Basic Books.

Huang, M.-H., & Rust, R. T. (2021). Artificial intelligence in service. Journal of Service Research, 24(1), 3–19. https://doi.org/10.1177/1094670520902266

Marcus, G., & Davis, E. (2020). Rebooting AI: Building artificial intelligence we can trust. Vintage.

Page, S. E. (2007). The difference: How the power of diversity creates better groups, firms, schools, and societies. Princeton University Press.

Senge, P. (1990). The fifth discipline: The art and practice of the learning organization. Doubleday.

Snow, C. P. (1959). The two cultures. Cambridge University Press.

Taleb, N. N. (2012). Antifragile: Things that gain from disorder. Random House.

Tegmark, M. (2018). Life 3.0: Being human in the age of artificial intelligence. Vintage.

> Building Cloud Redundancy for Small Businesses: Surviving Outages in an AI, Multi-Cloud World

By Adaptiva Corp and Coursewell Staff

Abstract

Recent disruptions—such as the October 2025 AWS US-EAST-1 outage—exposed the fragility of digital operations dependent on a single cloud provider. Small and medium-sized enterprises (SMEs) increasingly rely on cloud platforms for daily business continuity, yet many lack redundancy strategies to withstand provider-level failures. This paper presents a practical framework for SMEs to achieve cost-effective cloud resilience through redundancy, backup discipline, failover planning, and artificial intelligence (AI)-assisted monitoring. It synthesizes industry best practices and demonstrates how AI-driven analytics can automate outage detection, forecast risks, and orchestrate failover processes. The goal is to help smaller organizations design realistic, multi-layered defenses against downtime, data loss, and service unavailability.

Introduction

Cloud computing has become the backbone of modern business operations. However, dependence on a single provider—most commonly Amazon Web Services (AWS)—creates systemic vulnerability. When AWS US-EAST-1 suffered a regional DNS-related failure on October 20, 2025, thousands of organizations experienced widespread outages across web services, mobile apps, and data pipelines (Engadget, 2025; Reuters, 2025). For small businesses, even a few hours offline can disrupt customer trust, revenue, and reputation.

While large corporations maintain dedicated IT disaster-recovery teams, SMEs often lack such capacity. Their resilience must therefore depend on intelligence and automation rather than scale. Artificial intelligence (AI) now enables predictive analytics and real-time decision-making, allowing small enterprises to detect anomalies early, respond faster, and even automate their continuity operations.

The Need for Cloud Redundancy

Redundancy refers to maintaining backup systems or resources that can take over automatically (or rapidly) in the event of a failure (Liquid Web, 2024). For cloud environments, this includes replicated data centers, secondary providers, or mirrored applications across regions. The objective is to keep recovery within two targets:

RTO (Recovery Time Objective): the maximum acceptable time to restore a system after a failure.

RPO (Recovery Point Objective): the maximum acceptable data loss, measured as the time between the last recoverable copy and the failure.
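As a rough worked example, assume backups run on a fixed schedule and each backup takes some time to finish copying. The worst-case data loss is then the backup interval plus the copy duration, because the failure can strike just before the next backup lands. Recovery time is treated here as the sum of detection, failover, and validation. The function names and this phase breakdown are illustrative assumptions, not a formula taken from the cited sources.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta, copy_duration: timedelta) -> timedelta:
    """Worst-case data loss for periodic snapshot backups.

    Assumes each snapshot captures state at its start time and only becomes
    usable once copying finishes. If the failure hits just before the next
    snapshot finishes copying, everything written since the previous
    snapshot started is lost: interval + copy duration.
    """
    return backup_interval + copy_duration


def meets_rpo(target: timedelta, backup_interval: timedelta, copy_duration: timedelta) -> bool:
    """True when the schedule's worst-case data loss fits within the RPO target."""
    return worst_case_data_loss(backup_interval, copy_duration) <= target


def meets_rto(target: timedelta, detection: timedelta, failover: timedelta,
              validation: timedelta) -> bool:
    """Treat recovery time as the sum of hypothetical phases: detect, fail over, validate."""
    return detection + failover + validation <= target


# Hourly snapshots that take 10 minutes to copy can lose up to 70 minutes of data.
print(worst_case_data_loss(timedelta(hours=1), timedelta(minutes=10)))  # 1:10:00
print(meets_rpo(timedelta(hours=1), timedelta(hours=1), timedelta(minutes=10)))  # False
print(meets_rto(timedelta(minutes=30), timedelta(minutes=5),
                timedelta(minutes=15), timedelta(minutes=5)))  # True
```

The takeaway is that a "one-hour backup" schedule does not by itself guarantee a one-hour RPO. The interval and the copy time both count.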

While many SMEs depend solely on AWS S3 or EC2, the single-cloud model concentrates risk. Multi-cloud or hybrid models distribute workloads across independent providers—allowing operations to continue when one fails (DigitalOcean, 2023).

AI enhances this by continuously analyzing telemetry, predicting service degradation, and even initiating self-healing workflows before a failure occurs. For example, machine learning models trained on latency, error rates, and API performance can signal when a cloud region is likely to degrade—triggering automated replication or traffic redirection in advance.
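The paragraph above describes trained ML models. The sketch below swaps in a much simpler statistical technique, not a trained model. It flags a latency sample as anomalous when it exceeds the rolling mean of the preceding window by more than a chosen number of standard deviations. The window size, threshold, and sample readings are hypothetical.

```python
import statistics


def detect_latency_spikes(samples: list[float], window: int = 20,
                          threshold: float = 3.0) -> list[int]:
    """Return indices of samples that spike above the rolling baseline.

    Each sample is compared with the mean and sample standard deviation of
    the `window` samples immediately before it. Only upward deviations are
    flagged, since rising latency is the degradation signal of interest.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mean = statistics.fmean(history)
        sd = statistics.stdev(history)
        if sd == 0:
            # Perfectly flat history: any increase counts as a deviation.
            if samples[i] > mean:
                anomalies.append(i)
        elif samples[i] > mean + threshold * sd:
            anomalies.append(i)
    return anomalies


# Hypothetical p95 latency readings in milliseconds: steady, then one spike.
latencies = [98.0, 102.0] * 15 + [500.0] + [98.0, 102.0]
print(detect_latency_spikes(latencies))  # [30]
```

In practice a flag like this would feed an alerting or failover workflow that waits for corroborating signals, rather than acting on a single spike.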

A Practical Framework for SMEs

1. Identify Critical Systems

Begin with a risk assessment: Which functions must stay online? Examples include websites, payment systems, learning management systems (LMS), or AI APIs. Document the maximum tolerable downtime (RTO) and acceptable data loss (RPO) for each component (EOXS, 2024). AI-powered risk analysis tools can evaluate historical incident data to prioritize which systems merit investment in redundancy.
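Here is a minimal sketch of this inventory step, assuming each component's targets are recorded in minutes. The `Component` type, the example systems, and the thresholds that map targets to a redundancy pattern are all hypothetical. They loosely echo the patterns in the appendix but are not a standard.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    rto_minutes: float  # maximum tolerable downtime
    rpo_minutes: float  # maximum tolerable data loss


def recommend_pattern(c: Component) -> str:
    """Map a component's objectives to a redundancy pattern (hypothetical thresholds)."""
    if c.rpo_minutes == 0 and c.rto_minutes <= 5:
        return "multi-primary / active-active"
    if c.rto_minutes <= 30:
        return "warm standby with replication"
    return "scheduled backup and restore"


def prioritize(components: list[Component]) -> list[tuple[str, str]]:
    """Order components tightest-RTO first (ties broken by RPO), each with a pattern."""
    ordered = sorted(components, key=lambda c: (c.rto_minutes, c.rpo_minutes))
    return [(c.name, recommend_pattern(c)) for c in ordered]


inventory = [
    Component("marketing website", rto_minutes=240, rpo_minutes=1440),
    Component("payment processing", rto_minutes=5, rpo_minutes=0),
    Component("learning management system", rto_minutes=30, rpo_minutes=15),
]
for name, pattern in prioritize(inventory):
    print(f"{name}: {pattern}")
```

The ordering puts the systems with the least tolerance for downtime at the top of the redundancy budget.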

2. Apply the “3-2-1” Backup Principle

Maintain three copies of data, stored on two media types, with one off-site. For example, production data might reside in AWS S3, with encrypted replicas in Google Cloud Storage and a long-term archive on Azure Blob Storage (CloudAlly, 2024).
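The rule is simple enough to check mechanically. This sketch encodes the example above under one stated assumption: in a cloud-only setup, separate providers stand in for distinct media types, since all three copies here are object storage. The `BackupCopy` type and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    location: str  # where the copy lives
    media: str     # storage medium or, in cloud-only setups, the provider
    offsite: bool  # outside the primary site or provider


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """Check the 3-2-1 rule: at least 3 copies, 2 media types, and 1 off-site copy."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


plan = [
    BackupCopy("AWS S3 (production)", media="aws", offsite=False),
    BackupCopy("Google Cloud Storage replica", media="gcp", offsite=True),
    BackupCopy("Azure Blob archive", media="azure", offsite=True),
]
print(satisfies_3_2_1(plan))      # True
print(satisfies_3_2_1(plan[:2]))  # False: only two copies
```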

Tools such as Veeam’s SureBackup (automated recoverability testing) and Rubrik’s Radar (machine-learning-based anomaly detection) can automatically verify backup integrity and detect ransomware-infected snapshots before restoration.
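Commercial tools go much further than this, but the most basic integrity check is a fixity comparison: hash the source and the copy and confirm that the digests match. This sketch uses Python's standard `hashlib`. The file names are hypothetical. A hash match only proves the copy is faithful, so it cannot reveal a source that was already encrypted by ransomware before the backup ran.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large backups never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_is_intact(source: Path, backup: Path) -> bool:
    """True when the backup is byte-for-byte identical to the source."""
    return sha256_of(source) == sha256_of(backup)


with tempfile.TemporaryDirectory() as tmp:
    src, bak = Path(tmp, "orders.csv"), Path(tmp, "orders.csv.bak")
    src.write_bytes(b"id,total\n1,19.99\n")
    bak.write_bytes(src.read_bytes())
    print(copy_is_intact(src, bak))          # True
    bak.write_bytes(b"id,total\n1,0.00\n")   # simulate silent corruption
    print(copy_is_intact(src, bak))          # False
```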

3. Adopt Multi-Cloud or Multi-Region Deployment

Distribute critical workloads across regions or providers to minimize dependency. AI-assisted orchestration tools like Terraform Cloud with AI agents, or Kubernetes autoscaling enhanced by predictive ML, can dynamically balance workloads based on utilization, cost, and reliability forecasts (CIO Dive, 2024).

4. Implement Health Checks and Automated Failover

Tools such as Cloudflare Load Balancer or NS1 can perform DNS failover. When augmented by AI anomaly detection—monitoring patterns across latency, response time, and packet loss—failover decisions can be made autonomously, often before a human operator notices the issue (Microsoft Learn, 2024).
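The decision logic behind health-checked failover can be sketched in a few lines. This is a hypothetical model, not the Cloudflare or NS1 API. Each origin pool is marked down only after several consecutive failed probes and marked up again only after several consecutive passing probes; this hysteresis prevents flapping. Traffic goes to the healthy pool with the best priority. The worst-case cutover estimate (probe interval × failure threshold, plus the DNS TTL that clients may cache) is an approximation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OriginPool:
    """A hypothetical model of one cloud's origin pool behind a DNS load balancer."""
    name: str
    priority: int               # lower value = preferred pool
    fail_threshold: int = 3     # consecutive failed probes before marking down
    recover_threshold: int = 2  # consecutive passing probes before marking up
    healthy: bool = True
    streak: int = 0             # consecutive probes that disagree with `healthy`

    def record_probe(self, ok: bool) -> None:
        """Apply one health-check result, with hysteresis to avoid flapping."""
        if ok == self.healthy:
            self.streak = 0
            return
        self.streak += 1
        needed = self.recover_threshold if ok else self.fail_threshold
        if self.streak >= needed:
            self.healthy = ok
            self.streak = 0


def choose_pool(pools: list[OriginPool]) -> Optional[str]:
    """Route to the highest-priority healthy pool; None means every pool is down."""
    healthy = [p for p in pools if p.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda p: p.priority).name


def worst_case_cutover_seconds(probe_interval_s: float, fail_threshold: int,
                               dns_ttl_s: float) -> float:
    """Approximate worst case: `fail_threshold` probe intervals to detect, then the DNS TTL."""
    return probe_interval_s * fail_threshold + dns_ttl_s


pools = [OriginPool("aws", 1), OriginPool("azure", 2), OriginPool("gcp", 3)]
for _ in range(3):
    pools[0].record_probe(False)   # AWS fails three probes in a row
print(choose_pool(pools))          # azure
for _ in range(2):
    pools[0].record_probe(True)    # AWS passes two probes in a row
print(choose_pool(pools))          # aws
print(worst_case_cutover_seconds(60, 3, 30))  # 210
```

With 60-second probes, a threshold of three, and a 30-second TTL, some users could see roughly 3.5 minutes of errors before cutover. That figure is worth comparing against each component's RTO.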

5. Test and Validate the Plan

Redundancy without rehearsal is false security. AI-driven chaos engineering platforms, such as Gremlin or AWS Fault Injection Service (formerly AWS Fault Injection Simulator), can automatically simulate outages and measure system resilience. This enables small businesses to “train” their systems for failure recovery, not just plan for it.
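Platforms like these inject faults into real infrastructure. The same idea can be rehearsed in miniature inside application code. Wrap a dependency so that a chosen fraction of calls fail, then confirm that every request is still served by the fallback. The provider names and the drill function below are hypothetical, and a real drill would target actual endpoints during a controlled window.

```python
import random
from typing import Callable


class InjectedFault(Exception):
    """Raised on purpose to simulate an outage of the primary provider."""


def with_fault_injection(fn: Callable[[], str], failure_rate: float,
                         rng: random.Random) -> Callable[[], str]:
    """Wrap `fn` so that roughly `failure_rate` of calls raise InjectedFault."""
    def wrapped() -> str:
        if rng.random() < failure_rate:
            raise InjectedFault("simulated outage")
        return fn()
    return wrapped


def call_with_fallback(primary: Callable[[], str], secondary: Callable[[], str]) -> str:
    """Try the primary; on a simulated outage, serve from the secondary."""
    try:
        return primary()
    except InjectedFault:  # a real client would catch its transport errors here
        return secondary()


def run_drill(failure_rate: float, calls: int = 1000, seed: int = 7) -> dict[str, int]:
    """Count which provider served each call during a seeded, repeatable drill."""
    rng = random.Random(seed)
    primary = with_fault_injection(lambda: "aws", failure_rate, rng)
    served = {"aws": 0, "azure": 0}
    for _ in range(calls):
        served[call_with_fallback(primary, lambda: "azure")] += 1
    return served


print(run_drill(failure_rate=1.0))  # {'aws': 0, 'azure': 1000}
print(run_drill(failure_rate=0.3))  # roughly 70/30; exact counts depend on the seed
```

The pass criterion for the drill is that no request goes unserved, whatever the failure rate.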

6. Manage Cost and Complexity

AI optimization tools analyze billing data, CPU utilization, and data egress patterns to recommend optimal resource allocations (Spot.io, 2024). This ensures redundancy investments remain sustainable.
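Before reaching for optimization tools, a back-of-the-envelope check can show whether a redundancy plan pays for itself. This sketch compares the yearly cost of replicas, cross-cloud egress, and idle standby compute against the expected loss avoided by reducing downtime. Every input below is a placeholder for illustration, not current provider pricing.

```python
def annual_redundancy_cost(replica_gb: float, replica_count: int,
                           storage_price_per_gb_month: float,
                           egress_gb_per_month: float,
                           egress_price_per_gb: float,
                           standby_compute_per_month: float) -> float:
    """Yearly cost of keeping replicas, shipping data to them, and idle standby compute."""
    monthly = (replica_gb * replica_count * storage_price_per_gb_month
               + egress_gb_per_month * egress_price_per_gb
               + standby_compute_per_month)
    return 12 * monthly


def annual_loss_avoided(outage_hours_without: float, outage_hours_with: float,
                        loss_per_outage_hour: float) -> float:
    """Expected yearly loss avoided by cutting downtime from one level to another."""
    return (outage_hours_without - outage_hours_with) * loss_per_outage_hour


# Placeholder inputs for illustration only; substitute your own bills and estimates.
cost = annual_redundancy_cost(replica_gb=500, replica_count=2,
                              storage_price_per_gb_month=0.02,
                              egress_gb_per_month=200, egress_price_per_gb=0.09,
                              standby_compute_per_month=150)
benefit = annual_loss_avoided(outage_hours_without=8, outage_hours_with=1,
                              loss_per_outage_hour=1500)
print(f"cost ${cost:,.2f}/yr vs. loss avoided ${benefit:,.2f}/yr")
```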

7. Safeguard Security and Compliance

All data transfers between clouds should use TLS 1.3 encryption and provider-native key management (AWS KMS, Azure Key Vault, GCP KMS). AI-enabled compliance tools can monitor for configuration drift or policy violations across multiple providers in real time.
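Some application code moves data between clouds itself rather than through a provider's managed transfer service. In that case the TLS 1.3 floor can be enforced in code. Below is a minimal sketch using Python's standard `ssl` module. It assumes a Python build linked against OpenSSL 1.1.1 or later. Key management through KMS or Key Vault is out of scope here, and the certificate paths are hypothetical.

```python
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses anything below TLS 1.3."""
    ctx = ssl.create_default_context()            # CA verification + hostname checks
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # reject TLS 1.2 and older
    return ctx


def tls13_server_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Server-side equivalent for a service that receives replicated data."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx
```

The client context can be passed to standard-library HTTP clients, for example `urllib.request.urlopen(url, context=tls13_client_context())`. A peer that only speaks TLS 1.2 then fails the handshake instead of silently downgrading.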

Conclusion

Cloud redundancy is no longer optional—it is a survival necessity. The October 2025 AWS outage demonstrated that resilience now depends as much on intelligence as on infrastructure. For small businesses, AI provides the missing operational layer—automating monitoring, forecasting, and recovery with minimal human intervention. By combining traditional redundancy with AI-assisted decision support, even a two-person IT team can achieve enterprise-level reliability.

References

CloudAlly. (2024). Cloud backup best practices. https://www.cloudally.com/blog/cloud-backup-best-practices/

CIO Dive. (2024). AWS outage highlights need for cloud interoperability. https://www.ciodive.com/news/aws-outage-cloud-recovery-interoperability/589844/

DigitalOcean. (2023). Multi-cloud strategy for startups and SMBs. https://www.digitalocean.com/resources/articles/multi-cloud-strategy

EOXS. (2024). Best practices for data redundancy and disaster recovery planning. https://eoxs.com/new_blog/best-practices-for-data-redundancy-and-disaster-recovery-planning

Engadget. (2025, October 20). Major AWS outage knocks Fortnite, Alexa, and Venmo offline. https://www.engadget.com/big-tech/amazons-aws-outage-has-knocked-services-like-alexa-snapchat-fortnite-venmo-and-more-offline

Liquid Web. (2024). Understanding redundancy in cloud computing. https://www.liquidweb.com/blog/redundancy-in-cloud-computing

Microsoft Learn. (2024). Designing for reliability and redundancy. https://learn.microsoft.com/en-us/azure/well-architected/reliability/redundancy

Reuters. (2025, October 20). Amazon says AWS service back to normal after outage. https://www.reuters.com/business/retail-consumer/amazons-cloud-unit-reports-outage-several-websites-down

Spot.io. (2024). Cloud optimization: four key factors. https://spot.io/resources/cloud-optimization/cloud-optimization-the-4-things-you-must-optimize

APPENDIX

Advanced AI models such as ChatGPT 5.0 can help draft a multi-cloud redundancy architecture like the one below, which your IT team may use to complement (not replace) AWS. It is designed for active-active stateless services, fast DNS failover, and clear data-layer options for different RPO/RTO needs.

High-Level Flow (Active-Active)

    flowchart LR
    U[Users] --> CF["Cloudflare DNS + Global LB<br/>Health checks, geo-steering, session affinity"]
    CF --> AWSFE["AWS edge (CloudFront/ALB)"]
    CF --> AZFE["Azure edge (Front Door/App GW)"]
    CF --> GCPFE["GCP edge (Global LB)"]
    AWSFE --> AWSEKS["EKS / Fargate<br/>Stateless APIs + web"]
    AZFE --> AZAKS["AKS<br/>Stateless APIs + web"]
    GCPFE --> GKE["GKE<br/>Stateless APIs + web"]
    subgraph Shared [Shared Services]
    RDS[(Data Layer Options)]:::data
    REDIS[("Redis Enterprise Active-Active<br/>or Valkey cluster w/ CRDTs")]:::data
    OBJ[("Object Storage Mesh<br/>S3 ⇄ GCS ⇄ Azure Blob (via R2/Tiered Cache)")]:::data
    VAULT["HashiCorp Vault (DR Secondary)"]:::ctrl
    CI["GitHub Actions + Argo CD + Terraform/Crossplane"]:::ctrl
    OBS["Datadog / Grafana Cloud / Loki"]:::ctrl
    end
    AWSEKS --> REDIS
    AZAKS --> REDIS
    GKE --> REDIS
    AWSEKS --> RDS
    AZAKS --> RDS
    GKE --> RDS
    AWSEKS --> OBJ
    AZAKS --> OBJ
    GKE --> OBJ
    classDef data fill:#eef,stroke:#55f;
    classDef ctrl fill:#efe,stroke:#5a5;

What runs where

Edge/DNS & Failover

Cloudflare Load Balancer + health checks + geo-/latency-based steering, with automatic failover if any region/cloud is unhealthy.

Optional: “Brownout” mode (reduce traffic to a degraded cloud without fully failing it).

Compute (stateless)

AWS EKS, Azure AKS, GCP GKE all running the same container images.

Use Argo CD (per cluster) for GitOps sync; Terraform + Crossplane to keep infra definitions portable.

Sessions & Caches

Redis Enterprise Active-Active (CRDT-based; managed, multi-cloud) for durable session/state, queues, and rate limits, so users can bounce between clouds without losing sessions.

Data Layer (pick one pattern below)

Good, simple DR (warm standby)

Primary PostgreSQL on AWS (RDS/Aurora).

Logical replication to Azure (Flexible Server) and GCP (Cloud SQL).

RPO ≈ minutes; RTO ≈ 15–30 min (automated promotion & DNS cutover).

Strong HA across clouds (near-zero RPO)

CockroachDB Dedicated or YugabyteDB Managed deployed across AWS+Azure+GCP regions.

True multi-primary, zone-tolerant. Higher cost/complexity, best resilience.

Event-sourced core

Kafka (Confluent Cloud, multi-region) + compacted topics as source of truth.

Downstream Postgres replicas in each cloud for reads; rebuild on failover from the log.

Object Storage

Keep S3 the “gold” bucket but sync to GCS and Azure Blob (scheduled Rclone; or use Cloudflare R2 with Tiered Cache to front them all).

Serve public assets via Cloudflare CDN regardless of origin.
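A scheduled one-way sync such as `rclone sync` makes each replica match the gold bucket. The function below is a conceptual model of those semantics, not rclone's implementation. Real tools compare sizes, modification times, or provider checksums, and must cope with multipart-upload ETags that are not plain content hashes. The object keys and fingerprints are hypothetical.

```python
def plan_one_way_sync(gold: dict[str, str],
                      replica: dict[str, str]) -> dict[str, list[str]]:
    """Plan a one-way sync from the gold bucket to a replica.

    Both arguments map object key -> content fingerprint. The gold side
    always wins: keys missing or different in the replica are copied, and
    keys that exist only in the replica are deleted, so the two listings
    end up identical.
    """
    to_copy = sorted(k for k, fp in gold.items() if replica.get(k) != fp)
    to_delete = sorted(k for k in replica if k not in gold)
    return {"copy": to_copy, "delete": to_delete}


gold_s3 = {"img/logo.png": "a1", "docs/terms.pdf": "b2", "img/new.jpg": "c3"}
gcs_replica = {"img/logo.png": "a1", "docs/terms.pdf": "stale", "tmp/old.bin": "d4"}
print(plan_one_way_sync(gold_s3, gcs_replica))
# {'copy': ['docs/terms.pdf', 'img/new.jpg'], 'delete': ['tmp/old.bin']}
```

The delete step has a consequence. An accidental deletion in the gold bucket propagates to every replica on the next run. Enabling object versioning on the replicas is one common way to keep that recoverable.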

Secrets & Keys

Vault primary in AWS, DR secondary in Azure; agents on each cluster.

Cloud-native KMS (KMS/Key Vault/Cloud KMS) for envelope encryption per cloud.

Observability

Datadog (or Grafana Cloud) as a single pane of glass: uptime checks from multiple regions, log/trace/metric correlation across clouds.

Failover logic (practical)

Health checks: Cloudflare probes /healthz on each cloud’s edge/ingress.

Route steering: If AWS US-EAST-1 degrades, traffic shifts to Azure/GCP automatically.

State continuity: Sessions live in Redis A-A; users continue seamlessly after re-route.

Data writes:

Pattern 1: App flips to Azure/GCP DB only after promotion (short write freeze).

Pattern 2: Multi-primary DB continues without interruption.

Storage: Static/media keep serving (Cloudflare cache + multi-origin).

Rollback: When AWS recovers, traffic is gradually rebalanced (canary % ramp).
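The rollback step can be sketched as a small state machine. The share of AWS's normal traffic that is restored steps up through a fixed ramp while the observed error rate stays under a threshold, and drops back to zero if the threshold is exceeded. The step sizes and the 1% error threshold are hypothetical. In practice each new value would be pushed to the load balancer's pool weights between observation periods.

```python
RAMP_STEPS = (5, 25, 50, 100)  # hypothetical % of AWS's normal traffic share restored
MAX_ERROR_RATE = 0.01          # hypothetical abort threshold (1% of requests)


def next_aws_weight(current: int, observed_error_rate: float) -> int:
    """Advance one ramp step, or drop back to 0% if errors exceed the threshold."""
    if observed_error_rate > MAX_ERROR_RATE:
        return 0  # abort the ramp: keep serving from Azure/GCP
    for step in RAMP_STEPS:
        if step > current:
            return step
    return current  # already fully rebalanced


weight, history = 0, []
for error_rate in (0.001, 0.002, 0.0, 0.001):  # healthy readings at each step
    weight = next_aws_weight(weight, error_rate)
    history.append(weight)
print(history)                    # [5, 25, 50, 100]
print(next_aws_weight(50, 0.05))  # 0
```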

CI/CD & Configuration

Build once, run everywhere: GitHub Actions builds the image → pushes it to GHCR/ECR/ACR/Artifact Registry (Google’s successor to GCR).

Argo CD per cluster watches the same manifests/Helm charts (env overlays).

Infra as code: Terraform modules for each cloud; Crossplane for dynamic app-level resources (DBs, buckets) with the same API.

RPO/RTO cheat sheet

| Pattern | RPO | RTO | Complexity | Notes |
|---|---|---|---|---|
| Logical replication (warm standby) | Minutes | 15–30 min | Low-Med | Easiest path from current AWS setup |
| Multi-primary DB (CRDB/YB) | ~0 | ~5 min | High | Best for write-heavy, global apps |
| Event-sourced core | ~0 | ~10–20 min | Med-High | Great auditability & rebuilds |

Security & Compliance quick wins

Federate identities via Entra ID + AWS IAM Identity Center + Google IAM (SAML/OIDC).

Per-cloud network policies, mTLS between services, and WAF at Cloudflare + cloud-native WAFs.

Encrypt in transit (TLS 1.3) and at rest (KMS/Key Vault/Cloud KMS).

Centralized audit trails in Datadog/Grafana with immutable archives in object storage.

> AI-Resilient Careers: How to Future-Proof Your Career in the Age of Intelligent Machines

By Walter Rodriguez, PhD, PE

FUTURE-PROOF YOUR CAREER: In an era when generative AI, agentic AI, Physical AI, automation, and rapid technological change are rewriting the rules of work, many people face understandable anxiety about career stability. The good news is that while AI will transform many jobs, some professions are better suited to survive and even thrive. For anyone exploring new careers (students, career-changers, lifelong learners), understanding these resilient pathways is essential.

The Landscape: Disruption & Opportunity

AI and automation are no longer hypothetical. The Future of Jobs Report 2025 from the World Economic Forum projects that 92 million roles globally could be displaced by 2030, though 170 million new jobs may emerge, yielding a net gain of around 78 million positions (World Economic Forum, 2025).

McKinsey’s research reinforces this duality. Its analysis of generative AI finds that current technologies could automate work activities that absorb up to 70 percent of employees’ time in many jobs. An earlier McKinsey report estimated that 75 million to 375 million workers may need to switch occupational categories or retrain by 2030, with the upper figure reflecting rapid automation scenarios (McKinsey, 2017).

Thus, the future of work presents both risk and possibility. The key for students and career-seekers is choosing paths with high resilience to disruption and high upside for growth.

What Makes a Career “AI-Resilient”?

Jobs that are more likely to endure tend to share certain features. The more of these a career embodies, the more future-proof it may be:

Human + relational components

Empathy, emotional intelligence, coaching, negotiation, mentorship, and interpersonal trust are hard to automate.

Creative and strategic thinking

AI is good at pattern recognition, but less able to originate novel ideas, vision, or strategy from scratch.

Expert oversight and interdisciplinary judgment

Technology needs human governance: interpreting outputs, resolving ambiguity, ensuring ethics, and applying domain wisdom.

Integration with emerging tech

Careers that work with AI (not simply independent of it) are often safer. The ability to collaborate with intelligent systems is a strength, not a threat.

Adaptability and lifelong learning

The faster the pace of change, the more important it is to keep evolving.

Work in physical, unpredictable, or care-centric settings

Jobs that require hands, bodies, presence, or caring relationships are tougher to replace.

A recent working paper posted on arXiv, Complement or Substitute? How AI Increases the Demand for Human Skills, finds that AI tends to complement human skills (raising demand for them) more than it substitutes for them. Skills such as digital literacy, resilience, interpersonal collaboration, and judgment are increasingly rewarded.

Careers with Strong Prospects

Below is a curated list of professions that show strong signs of resilience and growth in an AI-inflected future:

| Field (example professions) | Why It’s Resilient | Key Skills to Cultivate |
|---|---|---|
| Healthcare & Human Services (nurses, therapists, geriatric care, rehabilitation) | Aging populations and human care demands grow; AI may assist diagnostics, but human caregivers remain essential. | Empathy, clinical judgment, patient communication, human-AI partnership |
| Education & Learning Design | Teaching involves mentorship, motivation, social context, and customization beyond algorithmic tutoring. | Instructional design, pedagogical theory, edtech fluency, emotional attunement |
| Skilled Trades & Technical Maintenance (electricians, HVAC, robotics maintenance, repair) | Physical environments are messy and unpredictable; automation costs are high in many real-world settings. | Diagnostic thinking, hands-on skill, safety, continuous technical upgrading |
| Technology & AI-Adjacent Roles (data science, AI ethics, machine learning engineering, cybersecurity) | As AI spreads, people will be needed to build, oversee, secure, and interpret systems. | Algorithmic thinking, ethics, security, cross-domain knowledge |
| Creative & Strategic Arts (design, content strategy, branding, media direction) | Creative vision, narrative, user experience, and brand identity are strongly human-led. | Storytelling, design thinking, cultural literacy, AI-augmented creativity |
| Business Leadership, Consulting & Organizational Strategy | Complex decisions, change management, stakeholder dynamics, and ethical judgment remain human domains. | Systems thinking, organizational psychology, diplomacy, integrative judgment |
| Green Jobs & Sustainability (renewable energy, climate adaptation, ecological planning) | The transition to sustainable infrastructure will generate massive new demand. | Environmental science, project management, interdisciplinary engineering, regulatory fluency |

In the Future of Jobs Report 2025, occupations such as software developers, construction workers, shop salespersons, and delivery drivers appear among those with the largest projected growth globally (World Economic Forum, 2025).

In the U.S., McKinsey sees AI augmenting rather than replacing knowledge work in STEM, business, legal, and creative roles, while accelerating the decline in office support, administrative, and food service roles (McKinsey, Generative AI and the Future of Work in America).

Meanwhile, bibliometric research predicts that by 2029, the U.S. might lose over 1 million jobs in office and administrative support roles due to AI substitution of repetitive tasks (Pennathur et al., 2024).

What Students Should Do Now: Strategies to Thrive

Here are actionable steps students and career-seekers can take to increase their resilience and readiness for the AI era:

Embrace hybrid skills

Don’t choose just technical or just human skills; aim for T-shaped profiles (deep in one area + broad in others). For example, a clinician who knows data analysis, or a designer who understands AI prompts.

Prioritize AI fluency & tool literacy

Even in non-technical careers, being able to work with AI (prompting, interpreting outputs, verifying results) will be a major competitive advantage.

Seek experiential learning and cross-disciplinary projects

Internships, maker labs, and real-world capstones teach adaptation, ambiguity, and human-tech collaboration.

Build a portfolio, not just credentials

Show what you can do (projects, case studies, creative work, prototypes) instead of relying solely on degrees.

Practice lifelong learning and resilience

Adopt a growth mindset; set aside time for ongoing upskilling (e.g., microcredentials, bootcamps, MOOCs).

Network across fields and stay informed on emerging trends

Many “new jobs” will come at intersections: climate + AI, healthcare + robotics, education + XR.

Focus on value creation and uniqueness

Even in saturated fields, one can specialize (e.g., elder care technology, climate adaptation consulting, neuro-informed education).

A Balanced Vision: Not Doom, But Transition

It is tempting to view AI purely as a threat, but history suggests otherwise. Technological revolutions, from mechanization to the digital era, have destroyed some tasks but created new ones and raised productivity overall (McKinsey, 2017).

Nonetheless, the speed and scope of change demand more proactive navigation this time around. Understanding which trajectories are most robust, investing in complementary skills, and staying adaptable will determine who thrives.

By guiding students toward professions that integrate human strengths with technological fluency, you help them not only survive but flourish in the age of intelligent machines.

References

McKinsey. (2017). Jobs Lost, Jobs Gained: What the Future of Work Will Mean for Jobs, Skills, and Wages.

McKinsey. (2023). Generative AI and the Future of Work in America.

Pennathur, P., Boksa, V., Pennathur, A., Kusiak, A., & Livingston, B. (2024). The Future of Office and Administrative Support Occupations in the Era of Artificial Intelligence: A Bibliometric Analysis. arXiv.

World Economic Forum. (2025). Future of Jobs Report 2025.

> How AI-Ready Are You? (Reflection and Self-Assessment)

By Walter Rodriguez, PhD, PE

Grab a pen, or take a quiet moment to reflect on the following questions.

These reflection points will help you gauge where you stand in terms of AI preparedness and where you might need to focus or learn.

There are no right or wrong answers – this is for you:

· Do you know how AI is being used in your current job or industry? (For example, are you aware of any AI-driven tools or processes in your daily workflow or that your company uses? If not, can you find out?)

· When was the last time you learned a new digital skill or tool? (Have you tried out any AI-based tools like a chatbot, a voice assistant, or an analytics program in the past year? Staying curious and hands-on is key to building confidence.)

· Which parts of your work are repetitive or data-heavy, and could you imagine automating them? (List 1–3 tasks you do often that are tedious. These might be areas where AI could help – or might eventually handle. How would you feel about that, and what would you do with the time saved?)

· Are you actively upskilling or reskilling for the future? (This could be formal, like taking an online course on data analysis or UX design, or informal, like watching YouTube tutorials on a new app. If you haven’t in a while, identify one skill that interests you and aligns with future trends.)

· How strong are your “human” skills – the ones AI can’t easily replicate? (Think about skills like creativity, critical thinking, empathy, teamwork, and leadership. Give yourself an honest score or assessment. These are the areas to lean into and highlight in your career as automation grows.)

· Have you considered starting a side project or hustle using your skills (with a little help from AI)? (Not everyone wants a side hustle, which is fine. But if you do, what passion or skill could you monetize, and can AI tools make it easier to start? This could range from freelancing on the side to building a personal brand to consulting gigs. Jot down a couple of ideas, no matter how small.)

Take a look at your responses. They will give you a sense of your AI readiness. You might realize, for example, that you haven’t been keeping up with tech changes in your field – that’s okay, now’s the time to start. Or you might see that you’re doing well in adaptability but could improve in technical know-how (or vice versa). This self-assessment isn’t about inducing worry; it’s about shining a light on where you stand so you can make a plan.

No matter where you are right now, remember that the fact you’re even thinking about these questions is a huge step. Most people are still in “wait and see” mode. But you’re here, getting informed and proactive. That alone sets you apart as someone ready to take control of your career in the age of AI. As we move forward on this path, keep these reflection points in mind. In this blog, we’ll address many of them in detail – from how to learn new AI tools, to boosting those all-important human skills, to finding your niche in an AI-driven economy.

At Coursewell, we delve deeper into building your AI toolkit, explore success stories of people who transitioned roles or started businesses thanks to AI, and provide checklists for specific actions (like updating your resume for the AI age, networking in a digital world, and more). By the end of this journey, you’ll not only speak the language of AI, without the jargon, but also have the confidence and a plan to ensure your career survives and flourishes in this exciting new era.

We will dive deeper each time, so get started.

Remember: AI is a tool, and you are the human driving it. With the right mindset and knowledge, the future is yours to shape. Let’s get started on making you the AI-savvy professional that the future needs.

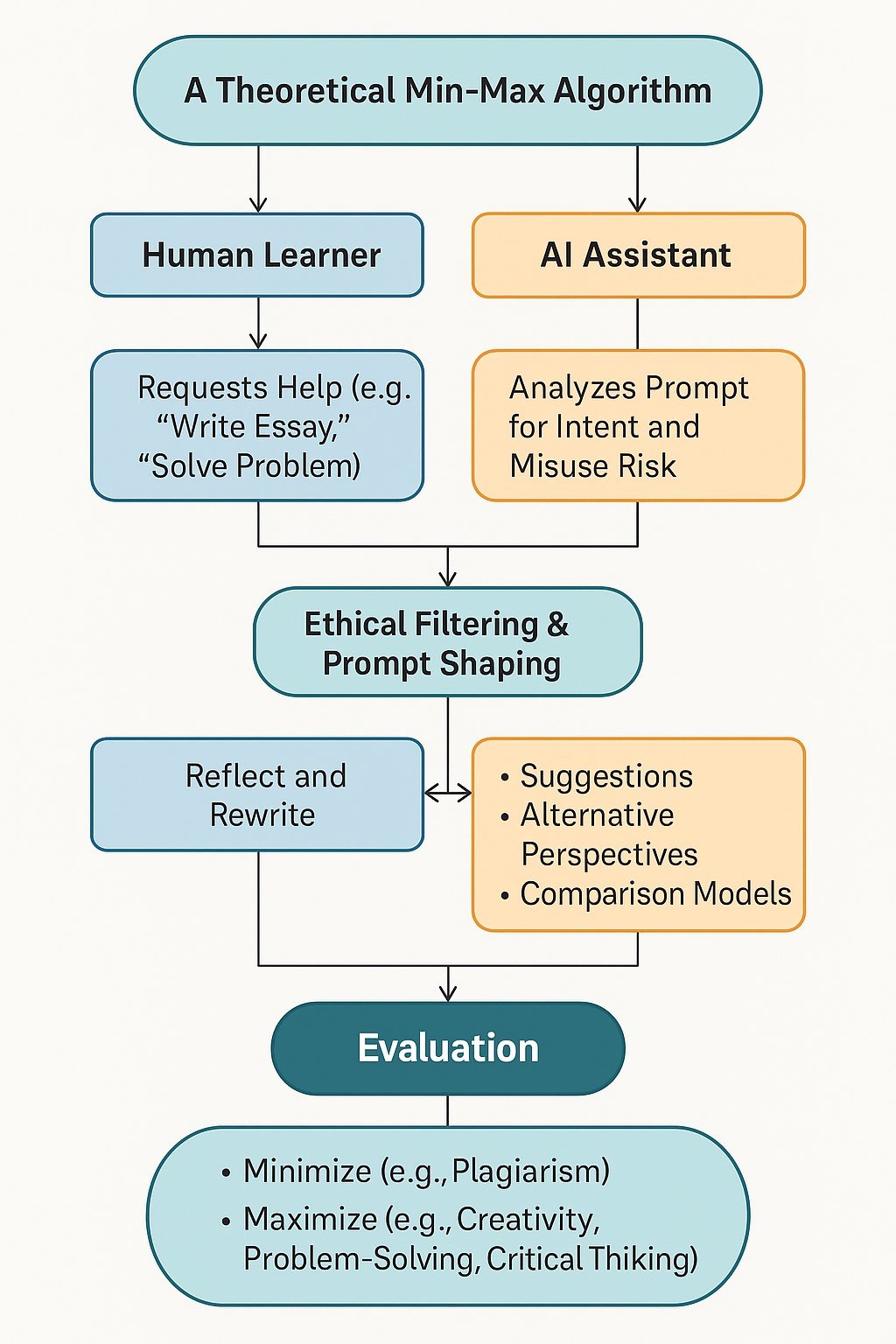

> Integrating Artificial Intelligence into Learning Management Systems: Opportunities, Ethical Dilemmas, and Institutional Responsibilities

By Walter Rodriguez, PhD, PE

Abstract

Higher education institutions are increasingly integrating Artificial Intelligence (AI) into Learning Management Systems (LMSs), such as Canvas and Moodle. These integrations promise to transform instructional delivery, student support, and administrative efficiency. This paper critically analyzes the pedagogical benefits and ethical risks associated with AI-enhanced LMS environments. AI tools—ranging from personalized learning pathways and intelligent tutoring systems to automated grading and data-driven analytics—have demonstrated their capacity to enhance engagement, efficiency, and educational outcomes. However, their adoption introduces pressing ethical issues, including data privacy, algorithmic bias, surveillance, and diminished academic autonomy. This paper reviews current AI implementations across LMS platforms, evaluates their educational impact, and assesses institutional challenges, particularly in values-based contexts like Ave Maria University. By examining emerging governance strategies, ethical frameworks, and human-centered approaches, this paper offers recommendations for the responsible integration of AI. Ultimately, institutions must balance innovation and oversight to ensure AI augments—rather than undermines—the pedagogical mission and ethical integrity of higher education.

“Responsible AI integration requires more than innovation—it demands wisdom”

Introduction

Learning Management Systems (LMSs) have become essential infrastructure in higher education, supporting online, hybrid, and face-to-face instruction. Platforms such as Canvas, Moodle, Blackboard Learn, and D2L Brightspace now host the majority of course content, assessments, and student-faculty interactions across colleges and universities. As these systems evolve, institutions increasingly integrate Artificial Intelligence (AI) to enhance functionality, support personalized learning, and streamline instructional and administrative tasks.

AI integration in LMS platforms reflects a broader shift toward data-driven, adaptive, and scalable education. Leading vendors now offer features such as real-time feedback, intelligent tutoring, automated content generation, and predictive analytics. For instance, Canvas integrates with tools like Khanmigo for AI-assisted lesson planning, while Moodle 4.5 allows seamless access to AI services for content creation and translation. These innovations promise to reduce faculty workload, improve learner engagement, and support data-informed decision-making.

At the same time, educators and administrators face growing concerns about AI’s ethical and social implications. Stakeholders question how LMS vendors collect and use student data, how AI systems may reinforce existing biases, and whether AI-generated outputs undermine academic integrity or reduce opportunities for authentic learning. Institutions with strong values-based missions—such as Ave Maria University, a Catholic liberal arts college—must grapple with whether AI aligns with or threatens their core educational principles. For example, Ave Maria explicitly prohibits unauthorized AI use in academic work while recognizing its potential instructional value if properly cited and guided.

This paper critically analyzes the integration of AI into LMS platforms, focusing on both educational benefits and ethical dilemmas. It examines how AI enhances teaching and learning through personalization, automation, and engagement. It also examines how AI complicates longstanding ethical norms around data privacy, algorithmic fairness, academic honesty, and human oversight. Drawing on examples from Canvas, Moodle, and other platforms, and situating the analysis in institutional contexts such as Ave Maria University, we identify practical strategies to maximize benefits while minimizing harm. Ultimately, we argue that ethical and effective AI adoption in LMS platforms requires governance frameworks, transparency, and continuous faculty development, not just technological enthusiasm.

Background: AI in Learning Management Systems

Artificial Intelligence (AI) has rapidly become a defining feature of next-generation Learning Management Systems (LMS). Developers have integrated AI into these platforms to automate instructional tasks, personalize learning experiences, and analyze student performance data. While early LMS designs focused on content delivery and administrative tracking, today’s systems incorporate increasingly sophisticated AI tools that redefine how educators and students interact within digital environments.

LMS platforms such as Canvas, Blackboard Learn, D2L Brightspace, and Moodle now offer AI-enhanced features for course design, real-time feedback, predictive analytics, and multilingual access. These tools rely on machine learning algorithms, natural language processing (NLP), and generative AI models to support faculty and improve student learning outcomes.

Each LMS provider has introduced distinctive AI capabilities that illustrate the rapid evolution of digital teaching environments. Canvas integrates AI tools that generate discussion summaries, translate content in real time, and suggest instructional resources. Blackboard’s AI Design Assistant automates course scaffolding and grading. Brightspace’s Lumi engine creates aligned assessments. Moodle’s open-source architecture allows institutions to integrate third-party AI models while emphasizing transparency and equity.

AI also transforms instruction by providing adaptive content, real-time feedback, and early warning systems for disengaged students. Studies report that these systems can improve engagement, retention, and instructor efficiency. However, their adoption raises complex questions about privacy, fairness, and academic autonomy, which the following sections explore.

Benefits of AI Integration in LMS (Pros)

Artificial Intelligence (AI) offers powerful enhancements to Learning Management Systems (LMS) by improving personalization, streamlining assessment, increasing engagement, and enabling data-informed decision-making. This section examines how AI improves learning environments for students, supports instructors, and enhances administrative efficiency.

Personalized and Adaptive Learning

AI tools tailor instruction based on student performance, preferences, and behavior. Systems such as Brightspace adjust content complexity in real time, while Moodle agents recommend adaptive practice and gamified exercises to sustain motivation. Canvas’s NLP features support multilingual learners by translating content and summarizing discussions. These capabilities promote inclusion, particularly for non-native speakers and students with diverse learning needs.

Efficient Assessment and Feedback

AI enables automated grading, personalized feedback, and scalable evaluation. Blackboard’s AI Design Assistant and Brightspace’s Lumi engine generate quiz questions aligned with learning outcomes. AI tools provide instant feedback on writing and problem-solving tasks, allowing students to iterate and instructors to manage large cohorts efficiently.

Increased Student Engagement and Support

AI chatbots and tutors enhance engagement by answering questions instantly and nudging students toward next steps. Canvas provides generative summaries that keep discussion forums accessible, while Moodle uses adaptive gamification to motivate learners. Predictive dashboards in Blackboard and Brightspace alert faculty to at-risk students, enabling proactive outreach and improved retention.

Administrative Efficiency and Strategic Planning

AI-powered dashboards support institutional decision-making by identifying patterns in course performance, engagement, and resource use. Automation tools reduce administrative workload and ensure compliance with academic policies. Vendors like Instructure and Anthology enable institutions to configure AI settings to reflect local governance, privacy standards, and pedagogical priorities.

Together, these benefits demonstrate that AI, when used thoughtfully, enhances instructional effectiveness, student outcomes, and institutional capacity.

Ethical Issues and Challenges (Cons) of AI in LMS

Despite its promise, AI in LMS introduces critical ethical and operational risks that institutions must confront.

Data Privacy and Security

AI tools often require detailed student data to function. When institutions transmit this data to external AI services without adequate contractual and technical safeguards, they risk violating FERPA or GDPR. LMS providers such as Canvas now offer transparency tools and administrator controls, but many third-party tools lack comparable protections. Without clear policies, AI integration risks creating a surveillance environment that undermines trust.

Algorithmic Bias and Equity

AI systems can reflect and reinforce biases present in their training data. Plagiarism detectors and essay evaluators sometimes misidentify writing by non-native English speakers or students from underrepresented groups as problematic. These false positives can result in academic penalties and systemic inequities unless institutions actively audit and refine AI tools.

Lack of Transparency and Accountability

Many AI algorithms operate as “black boxes.” When students receive feedback or grades without understanding how they were produced, they may question their legitimacy. Instructors must be able to explain and, if needed, override AI-generated outputs. Without clear accountability protocols, institutions risk eroding pedagogical authority and legal clarity.

Academic Integrity Risks

Students now use AI tools to generate essays, solve problems, or paraphrase content. While some institutions permit regulated use with citation (e.g., Ave Maria University), others struggle to define boundaries. Detection tools remain unreliable and can penalize innocent students. A better approach emphasizes thoughtful assignment design and transparent AI-use policies grounded in academic honesty.

Changing Roles of Educators and Students

AI can shift faculty from content creators to curators and moderators. While this can elevate pedagogy, it may also marginalize instructors if institutions adopt AI as a cost-saving substitute. Students must also learn how to use AI tools ethically, avoiding overreliance and developing critical thinking skills. Faculty development and digital citizenship education are essential.

These challenges demand structured governance, faculty training, and clear communication strategies to ensure AI supports—rather than undermines—educational values.

Conclusion and Recommendations

As Artificial Intelligence (AI) continues to shape the future of digital education, institutions face both unprecedented opportunities and pressing ethical responsibilities. Integrating AI into Learning Management Systems (LMS) such as Canvas and Moodle can dramatically enhance personalization, automate repetitive tasks, improve student engagement, and inform data-driven decision-making. However, these benefits come with ethical trade-offs, ranging from data privacy violations and algorithmic bias to transparency failures and challenges to academic integrity.

This paper critically analyzed both the advantages and ethical risks of AI-enhanced LMS platforms, especially in values-based institutional contexts such as Ave Maria University. By exploring AI features across major LMS platforms, reviewing recent research, and examining real-world policy responses, we demonstrated that successful AI integration depends not only on technological functionality but also on governance, transparency, and community trust.

Institutions must adopt a human-centered approach to AI—one that views technology as a tool to augment, not replace, the educational mission. Faculty must retain autonomy over instructional content, student support, and assessment design. Students must engage critically with AI tools, understanding their potential and their limits. Administrators must ensure that AI implementations reflect ethical principles, comply with laws, and support equity and inclusion.

Key Recommendations

Adopt Institutional AI Frameworks

Define clear ethical principles, such as transparency, equity, privacy, and accountability, and align AI policies with these values. Use existing models (e.g., Moodle AI Principles, Instructure’s guidelines) as starting points.

Establish Robust Governance

Form AI ethics or oversight committees responsible for evaluating LMS-integrated tools, auditing algorithms, and updating institutional policies. Require faculty review before deploying AI-generated content.

Strengthen AI Literacy

Provide professional development for faculty and orientation modules for students. Teach users to critically evaluate AI outputs, use tools ethically, and adapt instruction and assessment accordingly.

Ensure Human Oversight

Keep humans “in the loop.” Require human approval for high-stakes AI decisions (e.g., grades, plagiarism flags, risk alerts). Offer appeal processes and require AI usage disclosure in syllabi.

Foster Transparent Communication

Inform users when AI is active. Explain what data AI systems use and how results are generated. Require documentation or confidence indicators for AI-driven analytics.

Promote Continuous Evaluation

Regularly assess the educational and ethical impact of AI tools. Use institutional research, surveys, and classroom evidence to improve practices. Encourage partnerships with vendors and peer institutions to share findings.

Final Reflection

Ultimately, AI is not neutral. It reflects the values, intentions, and assumptions of those who design, implement, and oversee it. In education—where relationships, trust, and transformation matter deeply—institutions must treat AI not simply as a technical add-on but as a cultural and ethical intervention.

By proceeding with intention, transparency, and empathy, institutions can ensure that AI enhances—not erodes—learning. As instructional designers, faculty leaders, and educational technologists, we must not only ask, “What can AI do for education?” but also, “What should we allow AI to do in our classrooms, communities, and culture?”

References

AlAli, N. M., & Wardat, Y. A. (2024). Artificial intelligence in education: Opportunities and ethical challenges. Journal of Educational Technology Research, 19(2), 233–248. https://doi.org/10.1016/j.jetr.2024.02.003