> Avoid ‘AI Lazy’ Syndrome: Min-Max Algorithm for Human-AI Collaboration

Combating “AI Lazy” Syndrome: Strategies for Minimizing Plagiarism and Enhancing Cognitive Engagement in Higher Education

Walter Rodriguez, PhD, PE

Abstract

The integration of artificial intelligence (AI) in academic environments presents both unprecedented opportunities and serious challenges. Among these challenges is the rise of “AI lazy” syndrome, in which students and even faculty depend too heavily on AI-generated content, risking academic dishonesty and intellectual stagnation. This paper examines what “AI lazy” syndrome entails, its causes, and strategies for mitigating it among educators and learners. Through pedagogical strategies, ethical training, and critical engagement with AI tools, higher education can maintain academic integrity while maximizing learning, creativity, and higher-order thinking. The Appendix includes an AI-Human collaborative algorithm that minimizes risks (e.g., plagiarism, overreliance) and maximizes gains (e.g., creativity, problem-solving, critical thinking) in educational settings.

Introduction

The rise of generative artificial intelligence, including tools such as ChatGPT, has significantly transformed the educational landscape. While AI can foster creativity, support learning, and enhance accessibility, it also introduces risks such as plagiarism and intellectual complacency. Increasingly, students may submit AI-generated content as their own without proper citation, and faculty may rely excessively on AI for content delivery or assessment (Cotton et al., 2023). This phenomenon, colloquially termed “AI lazy” syndrome, threatens the development of critical thinking and original thought. This article provides a comprehensive framework for minimizing plagiarism and AI misuse while maximizing educational outcomes.

What Is “AI Lazy” Syndrome and Why Does It Matter?

“AI lazy” syndrome refers to the uncritical reliance on AI tools to complete academic tasks without meaningful human input. Students may use AI to draft essays, solve problems, or answer discussion posts without synthesizing information themselves. Similarly, educators may use AI to generate assignments or grade work with minimal oversight. This behavior undermines academic integrity and stifles essential skills such as analysis, synthesis, and creativity (Zhai, 2022).

Unchecked, this syndrome can normalize academic dishonesty and weaken the learner’s capacity for independent thought (OpenAI, 2023). Furthermore, reliance on AI without understanding its limitations, such as hallucinated facts or biased outputs, can perpetuate misinformation and reduce the quality of learning outcomes (Kasneci et al., 2023).

When and Where Does It Happen?

AI misuse tends to occur:

When students face time constraints, lack confidence, or encounter difficult topics.

Where academic policies are unclear, enforcement is lax, or institutional guidance on AI is absent.

Common contexts include online learning environments, take-home exams, or asynchronous assignments where surveillance is minimal and AI tools are easily accessible (Smutny & Schreiberová, 2020). Moreover, institutions without formal policies or honor codes related to AI use leave room for ambiguity and misuse.

How to Minimize Plagiarism and Avoid AI Lazy Syndrome

1. Promote Ethical AI Literacy

Educators must explicitly teach students how to use AI responsibly. This includes understanding when AI is acceptable, how to cite it, and recognizing its limitations. The Modern Language Association (MLA) and American Psychological Association (APA) have both provided initial guidelines for citing AI-generated content (APA, 2023). Ethical awareness must be integrated into curricula across disciplines.

2. Design Authentic and Reflective Assessments

Assignments that require personal reflection, iterative feedback, or real-world application are more difficult for AI to replicate meaningfully (Bali et al., 2023). For example, a business ethics course might ask students to reflect on a local ethical dilemma they encountered, grounding responses in lived experience.

3. Use AI as a Scaffold, Not a Substitute

Faculty can model appropriate AI usage by encouraging students to use AI tools during brainstorming or early drafting stages, while still requiring original synthesis and critical evaluation of what the tools produce. Structured assignments can include prompts like:

Use AI to generate three possible solutions to a problem, then critique each one.

Compare your answer to the AI’s and identify where it falls short.

This approach engages critical thinking and supports metacognition (Webb et al., 2023).

4. Reinforce Honor Codes and Academic Integrity Policies

Universities should update and communicate academic integrity policies to reflect new realities of AI use. Policies should clarify what constitutes unauthorized AI use, while also empowering students to use AI ethically. Establishing clear consequences and consistent enforcement deters misconduct (Fishman, 2022).

5. Encourage Collaborative Problem-Solving

Group projects that involve shared responsibilities, peer review, and discussion encourage accountability and deeper learning. When students must explain concepts to peers, they are more likely to engage cognitively with material (Vygotsky, 1978).

Maximizing Creativity, Problem Solving, and Critical Thinking

AI can augment rather than hinder intellectual development when integrated thoughtfully. For instance:

Creativity can be sparked by using AI to explore unfamiliar genres or perspectives, followed by human refinement.

Problem-solving can be deepened by analyzing flawed AI solutions and correcting them.

Critical thinking can be fostered through comparative analysis of AI versus human reasoning.

The key is to maintain human agency and judgment in all phases of learning (Dwivedi et al., 2023).

Conclusion

While the emergence of AI tools in education offers significant benefits, the risks of overreliance—“AI lazy” syndrome—and plagiarism must be addressed through intentional design, ethical instruction, and engaged pedagogy. Institutions that proactively build AI literacy, revise assessment strategies, and reinforce academic integrity will be better positioned to prepare students not only to use AI but to think beyond it. Higher education must lead not in resisting AI, but in mastering it responsibly.

References

American Psychological Association. (2023). How to cite ChatGPT. https://apastyle.apa.org/blog/how-to-cite-chatgpt

Bali, M., Cronin, C., & Hodges, C. (2023). Ethics of care and academic integrity in the age of AI. Teaching in Higher Education, 28(4), 489–505. https://doi.org/10.1080/13562517.2023.2176836

Cotton, D. R., Cotton, P. A., & Shipway, J. R. (2023). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International, 60(2), 241–252. https://doi.org/10.1080/14703297.2023.2190148

Dwivedi, Y. K., Hughes, D. L., Baabdullah, A. M., Ribeiro-Navarrete, S., & Symonds, C. (2023). Artificial intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice, and policy. International Journal of Information Management, 70, 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642

Fishman, T. (2022). Academic integrity in the age of artificial intelligence. International Center for Academic Integrity.

Kasneci, E., Sessler, K., & Bannert, M. (2023). ChatGPT and education: Opportunities and challenges. Computers and Education: Artificial Intelligence, 4, 100234. https://doi.org/10.1016/j.caeai.2023.100234

OpenAI. (2023). ChatGPT usage policies. https://openai.com/policies/usage-policies

Smutny, P., & Schreiberová, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education, 151, 103862. https://doi.org/10.1016/j.compedu.2020.103862

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

Webb, M. E., Ifenthaler, D., & Gunawardena, C. N. (2023). Generative AI and learning: A metacognitive framework. Educational Technology Research and Development, 71(2), 567–587. https://doi.org/10.1007/s11423-023-10103-w

Zhai, X. (2022). Academic integrity in the age of AI: A call for reflection and action. AI & Society, 38(1), 1–6. https://doi.org/10.1007/s00146-022-01394-0

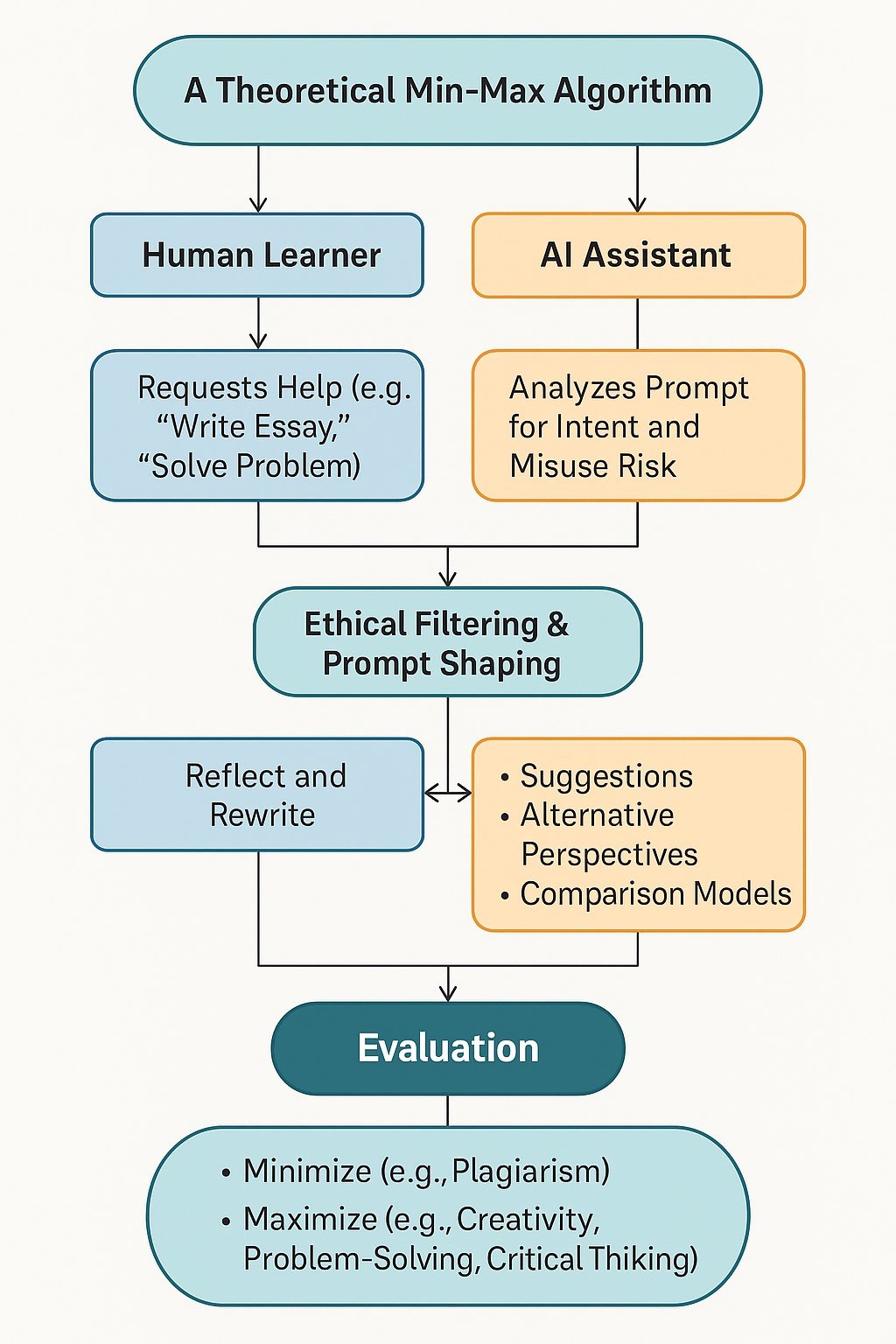


Appendix: A Theoretical Min-Max Algorithm for Human-AI Collaboration in Learning Environments

Objective:

Design an AI-Human collaborative algorithm that minimizes risks (e.g., plagiarism, overreliance) and maximizes gains (e.g., creativity, problem-solving, critical thinking) in educational settings.

1. Conceptual Framework

Players:

Human Learner (Student or Faculty)

AI Assistant (e.g., ChatGPT, other LLMs)

Strategies:

Each player has a set of strategies they can choose from in the educational interaction. These choices affect both academic integrity and learning depth.

Payoff Function:

$$U = \text{Maximize } (L + C + P + T) - \text{Minimize } (PL + AL)$$

Where:

$L$ = Learning

$C$ = Creativity

$P$ = Problem-solving

$T$ = Critical Thinking

$PL$ = Plagiarism

$AL$ = AI Lazy Syndrome

2. Algorithmic Structure (Python)

    def AI_Human_Collab_MinMax(state, depth, maximizingPlayer):
        # The helpers called here (is_terminal_state, evaluate, human_valid_actions,
        # simulate_human_action, ai_response_options, simulate_ai_action) are
        # application-specific and must be defined elsewhere.
        if depth == 0 or is_terminal_state(state):
            return evaluate(state)

        if maximizingPlayer:
            # Human is optimizing for authentic learning
            maxEval = float('-inf')
            for action in human_valid_actions(state):
                new_state = simulate_human_action(state, action)
                score = AI_Human_Collab_MinMax(new_state, depth - 1, False)
                maxEval = max(maxEval, score)
            return maxEval
        else:
            # AI is minimizing risk of misuse while offering assistance
            minEval = float('inf')
            for response in ai_response_options(state):
                new_state = simulate_ai_action(state, response)
                score = AI_Human_Collab_MinMax(new_state, depth - 1, True)
                minEval = min(minEval, score)
            return minEval
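The recursion above leaves its helper functions undefined, so it cannot be run as given. The following is a minimal sketch of one way to fill them in, purely for illustration. Everything except the recursion itself is an assumption: the `State` tuple, the `MAX_TURNS` cap, and the two move tables are hypothetical, with point values loosely borrowed from the weights in the evaluation section. Read literally, the minimizing branch picks the AI response that is worst for the learner's score, so the returned value is the score the learner can guarantee regardless of how the AI responds.

```python
from typing import NamedTuple


class State(NamedTuple):
    """Hypothetical toy state: running score and number of turns played."""
    score: int = 0
    turns: int = 0


MAX_TURNS = 4  # assumed cap on the length of one interaction

# Hypothetical move tables: each move maps to its effect on the running score.
HUMAN_ACTIONS = {"synthesize": 3, "paraphrase": 1, "copy_verbatim": -5}
AI_RESPONSES = {"scaffold": 0, "full_answer": -4}


def is_terminal_state(state):
    return state.turns >= MAX_TURNS


def evaluate(state):
    return state.score


def human_valid_actions(state):
    return list(HUMAN_ACTIONS)


def ai_response_options(state):
    return list(AI_RESPONSES)


def simulate_human_action(state, action):
    return State(state.score + HUMAN_ACTIONS[action], state.turns + 1)


def simulate_ai_action(state, response):
    return State(state.score + AI_RESPONSES[response], state.turns + 1)


def AI_Human_Collab_MinMax(state, depth, maximizingPlayer):
    """Standard minimax: the human maximizes, the AI minimizes evaluate()."""
    if depth == 0 or is_terminal_state(state):
        return evaluate(state)
    if maximizingPlayer:
        return max(
            AI_Human_Collab_MinMax(simulate_human_action(state, a), depth - 1, False)
            for a in human_valid_actions(state)
        )
    return min(
        AI_Human_Collab_MinMax(simulate_ai_action(state, r), depth - 1, True)
        for r in ai_response_options(state)
    )


# One human move followed by one AI response, human moving first.
print(AI_Human_Collab_MinMax(State(), 2, True))  # -1
```

In this toy setup the learner's best move is to synthesize (+3), and the worst-case AI response is a full answer (−4), giving −1 after one exchange. A collaborative reading of the model, in which the AI also tries to raise the score, would replace `min` with `max` in the AI branch; that is a different algorithm from the minimax shown in the text.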

3. Application Stages

Stage 1: Input Processing

Human: Requests help (e.g., “write essay,” “solve problem”)

AI: Analyzes the prompt for intent and risk of misuse

Stage 2: Ethical Filtering & Prompt Shaping

AI: Responds with scaffolding or critical questions, not just answers

Human: Must synthesize and reflect (i.e., is forced into a thinking loop)

Stage 3: Feedback Loop

AI gives:

Suggestions

Alternative perspectives

Comparison models

Human reflects and rewrites:

Annotates what was learned

Justifies choices

Documents how AI was used

Stage 4: Evaluation

The system calculates:

Depth of transformation

Degree of synthesis

Human-generated vs. AI-generated ratio

Plagiarism or pattern detection
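Stage 4 names a human-generated vs. AI-generated ratio without saying how it might be computed. One crude approximation, assuming the AI's output and the final submission are both available as plain text, is word-level overlap using Python's standard `difflib` module. The function name `human_ai_ratio` and the returned keys are hypothetical. Word overlap is only a rough proxy: it does not detect paraphrase and should not be treated as a plagiarism detector.

```python
from difflib import SequenceMatcher


def human_ai_ratio(ai_text: str, submission: str) -> dict:
    """Estimate what share of the submission's words were reused from the AI text."""
    ai_words = ai_text.lower().split()
    sub_words = submission.lower().split()
    if not sub_words:
        # Nothing was submitted, so there is nothing to attribute.
        return {"ai_share": 0.0, "human_share": 0.0}

    # Compare word sequences; autojunk is disabled so common words are not skipped.
    matcher = SequenceMatcher(None, ai_words, sub_words, autojunk=False)
    reused = sum(block.size for block in matcher.get_matching_blocks())

    ai_share = reused / len(sub_words)
    return {"ai_share": ai_share, "human_share": 1 - ai_share}


ai_draft = "climate change is driven by greenhouse gases"
print(human_ai_ratio(ai_draft, "climate change is real"))
# {'ai_share': 0.75, 'human_share': 0.25}
```

A low `human_share` would only flag a submission for closer reading; the depth of transformation and degree of synthesis listed above still call for human judgment.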

4. Evaluation Function evaluate(state)

| Variable | Weight | Evaluation Criteria |
| --- | --- | --- |
| Learning ($L$) | +3 | Evidence of comprehension and articulation |
| Creativity ($C$) | +2 | Novel ideas, perspectives, or analogies |
| Problem-Solving ($P$) | +2 | Logical reasoning, steps followed, real-world relevance |
| Critical Thinking ($T$) | +3 | Counterarguments, evaluation, ethical reflection |
| Plagiarism ($PL$) | −5 | Direct copying or uncited paraphrasing |
| AI Lazy ($AL$) | −4 | Overreliance on AI without human synthesis |

Total Score:

$$\text{Score} = 3L + 2C + 2P + 3T - 5PL - 4AL$$
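The weighted sum can be sketched in a few lines. This assumes each variable has already been rated on a 0 to 1 scale; the source does not specify a scale or how the ratings are obtained, so that part is an assumption. The names `WEIGHTS` and `weighted_score` are illustrative.

```python
from typing import Mapping

# Weights taken from the evaluation table: gains are positive, risks negative.
WEIGHTS = {"L": 3, "C": 2, "P": 2, "T": 3, "PL": -5, "AL": -4}


def weighted_score(ratings: Mapping[str, float]) -> float:
    """Return 3L + 2C + 2P + 3T - 5PL - 4AL for ratings assumed to lie in [0, 1]."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Fully engaged work with no plagiarism or overreliance.
print(weighted_score({"L": 1, "C": 1, "P": 1, "T": 1, "PL": 0, "AL": 0}))  # 10

# No engagement, full plagiarism and overreliance.
print(weighted_score({"L": 0, "C": 0, "P": 0, "T": 0, "PL": 1, "AL": 1}))  # -9
```

Under the 0 to 1 assumption the score ranges from −9 to 10, and because plagiarism and overreliance carry the two largest weights, a single fully triggered penalty outweighs any single fully achieved gain.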

5. Example Scenario

Prompt: “Write a 500-word essay on climate change.”

AI Output: Provides outline + suggestions + key sources (not the full essay)

Human Output: Writes the essay, integrates critical perspectives, cites AI as inspiration

Result:

High on L, T, P, and C

Low on PL and AL

Final evaluation score = High authenticity and integrity

6. Implementation Implications

Stakeholder Strategy

Faculty: Create assignments with reflection checkpoints

Students: Use AI for scaffolding, not submission

Developers: Implement guardrails + usage audits

Institutions: Set policies for responsible AI use

7. Reflection

A Min-Max algorithmic mindset encourages an optimal collaboration where the human maximizes educational gain, and the AI minimizes ethical and cognitive risks. In this shared responsibility model, AI becomes a cognitive amplifier, not a crutch. This partnership, guided by transparent heuristics, leads to deeper learning, greater originality, and stronger academic integrity.